Artificial intelligence (AI) is rapidly transforming various aspects of our lives, from healthcare to finance. However, the potential for bias embedded within AI systems presents significant ethical and societal challenges. This guide provides a thorough exploration of AI bias, its sources, detection methods, and mitigation strategies. Understanding and addressing bias is crucial for ensuring fairness and equity in AI applications.

This guide delves into the intricacies of AI bias, examining its multifaceted nature and the profound implications it has on various sectors. It will cover the different forms of bias, the crucial role of data in shaping AI models, and the practical steps to identify and mitigate bias in AI systems.

Defining AI Bias

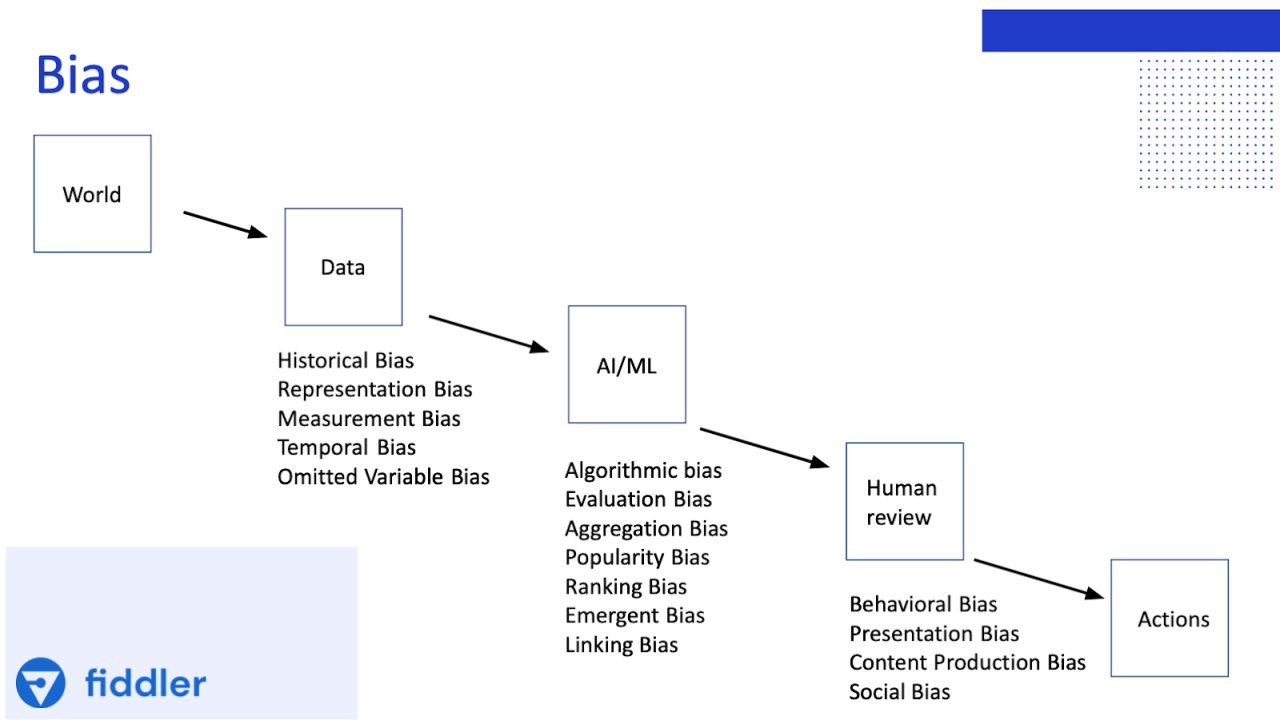

Artificial intelligence (AI) systems, while often lauded for their objectivity, can inherit and amplify biases present in the data they are trained on. This is fundamentally different from human bias, which is often influenced by conscious or subconscious motivations and experiences. AI bias, instead, arises from the inherent limitations and characteristics of the data and algorithms used to create the AI model.

Understanding these biases is crucial for developing fair and equitable AI systems.

AI bias manifests in various forms, each with distinct implications. Data bias, for example, is introduced when the training data itself reflects existing societal prejudices or inequalities. Algorithmic bias occurs when the algorithms used to process and interpret the data, through choices such as the objective they optimize or the features they rely on, perpetuate or exacerbate these biases. Selection bias arises from the way data is gathered or selected for training, which can produce skewed or incomplete representations of the population.

Each of these biases can have significant downstream effects, impacting the fairness and reliability of AI-powered applications.

Data Bias

Data bias is a critical concern in AI development because biased datasets can reflect existing societal prejudices or inequalities. For example, if a loan application dataset records lower historical approval rates for a specific demographic group, an AI model trained on those past decisions may learn to reproduce the disparity, potentially leading to discriminatory loan decisions. This bias arises from the source and representation of the data itself.

Algorithmic Bias

Algorithms used in AI systems can also introduce bias through design choices such as the objective being optimized, the features used as inputs, and the threshold used to make a decision. For instance, if an algorithm used to assess criminal risk is trained on data that shows higher recidivism rates among certain demographics and relies on features that act as proxies for group membership, it might unfairly label individuals from these groups as higher risk. This is not necessarily a reflection of any inherent difference between individuals but rather a consequence of how the algorithm turns biased data into decisions. This bias is embedded in the decision-making process of the AI.

Selection Bias

Selection bias occurs when the data used to train an AI model isn’t representative of the broader population. If a particular dataset used for image recognition primarily features images of people with certain skin tones, the resulting model might perform poorly on images of people with other skin tones. This is a result of the limited range of data and how it was selected.
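A first check for selection bias is to compare how often each group appears in the training data with its share of the population the system will serve. The sketch below assumes that a group annotation is available for each training example and that population shares are known; the function name, group labels, and numbers are all hypothetical.

```python
from collections import Counter


def representation_ratios(sample_groups, population_shares):
    """Return each group's share of the sample divided by its population share.

    A ratio well below 1.0 means the group is under-represented in the
    training data relative to the population the model will serve.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }


# Hypothetical skin-tone annotations for a 1,000-image training set and
# hypothetical shares of each group in the deployment population.
training_groups = ["lighter"] * 850 + ["darker"] * 150
population = {"lighter": 0.6, "darker": 0.4}

ratios = representation_ratios(training_groups, population)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}")
```

In this made-up example the "darker" group appears at less than half of its population share. Which ratio counts as too low is a judgment call for the team, not a fixed standard.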

Consequences of AI Bias in Application Areas

The consequences of AI bias can be severe in various application areas. In loan applications, biased AI models could perpetuate existing financial inequalities, limiting access to credit for certain demographics. In criminal justice, biased AI systems could lead to disproportionate arrests or sentencing for certain groups. In healthcare, biased AI models could lead to incorrect diagnoses or treatment recommendations, potentially impacting patient outcomes.

Table of AI Bias Types and Examples

| Type of Bias | Description | Real-World Scenario |
|---|---|---|
| Data Bias | Training data reflects existing societal biases. | A loan application dataset with a higher percentage of rejected applications from a particular ethnic group. |
| Algorithmic Bias | Algorithms perpetuate or exacerbate existing biases. | A criminal risk assessment algorithm that predicts higher recidivism rates for individuals from certain racial backgrounds. |
| Selection Bias | Data used for training is not representative of the broader population. | An image recognition system trained primarily on images of light-skinned individuals, resulting in poor performance on images of individuals with darker skin tones. |

Sources of AI Bias

AI models, trained on vast datasets, can inherit and amplify biases present in those datasets. Understanding the origins of these biases is crucial for developing fairer and more equitable AI systems. The biases embedded in the training data, design choices, and development processes can have significant real-world consequences, affecting various aspects of society.

Bias in Training Datasets

The foundation of any AI model is its training data. If this data reflects existing societal inequalities, the model is likely to perpetuate and may even amplify those biases. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, its accuracy may be significantly lower for individuals with darker skin tones. This is because the model has learned to identify features associated with a particular group but may not be able to generalize well to other groups.

Biased data can manifest in various forms, including gender, race, socioeconomic status, and geographic location.

Historical Data and Perpetuation of Bias

Historical data often contains inherent biases. If an AI model is trained on historical crime data, it may reflect existing societal biases and stereotypes. For instance, if certain communities were historically policed more heavily, arrest records will over-represent them, and the model may learn to associate those communities with higher crime rates, leading to discriminatory outcomes in predictive policing or other applications. The model may not differentiate between historical trends and the realities of a changing society, which can reinforce existing societal inequalities.
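How historical records can lock in a disparity can be illustrated with a deliberately simplified simulation, loosely modeled on a predictive-policing loop. Everything in it is an assumption for illustration: two hypothetical districts with identical true incident rates, a greedy rule that sends all patrols to the district with the most recorded arrests, and arrests that are recorded only where patrols go.

```python
def simulate_patrol_feedback(initial_arrests, true_rate, patrols_per_round, rounds):
    """Toy feedback loop: every round, all patrols go to the district with the
    most recorded arrests, and new arrests are recorded only where patrols are.

    Both districts share the same true incident rate, so any widening gap in
    the records is produced by the loop itself, not by underlying crime.
    """
    arrests = dict(initial_arrests)  # copy so the caller's history is untouched
    for _ in range(rounds):
        target = max(arrests, key=arrests.get)
        arrests[target] += patrols_per_round * true_rate
    return arrests


# Hypothetical starting records: district A was historically policed more.
history = {"district_a": 60, "district_b": 40}
final = simulate_patrol_feedback(history, true_rate=0.1, patrols_per_round=100, rounds=50)
share_a = final["district_a"] / sum(final.values())
print(f"District A share of recorded arrests: {share_a:.0%}")  # 93%, up from 60%
```

Real systems are less extreme than this greedy rule, but the mechanism is the same: a model trained on its own recorded outcomes keeps confirming the pattern it started with.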

Bias in Design and Development

The design and development process can also introduce biases into AI models. If the developers themselves hold implicit biases, these biases can subtly influence the design choices and the evaluation metrics used to assess the model’s performance. For instance, a model designed to assess loan applications might inadvertently favor applicants from specific demographics, reflecting the implicit biases of the developers.

This can lead to biased outcomes, such as denying loans to qualified individuals from underrepresented groups.

Relationship Between Data Characteristics and Bias Risk

The following table illustrates the potential correlation between data characteristics and the risk of introducing bias in AI models.

| Data Characteristic | Potential Bias | Mitigation Strategies |
|---|---|---|
| Imbalance in representation of different groups | Models may underperform or misclassify certain groups | Data augmentation, oversampling, or careful selection of representative data |
| Presence of outdated or incomplete data | Models may perpetuate outdated or inaccurate stereotypes | Data cleaning, updating, and incorporation of recent data sources |
| Data collection method bias | Models may inherit the biases of the data collection process | Employing diverse data collection methods, ensuring inclusivity, and validating data quality |
| Data annotation bias | Models may inherit the biases of human annotators | Using diverse annotators, validating annotations, and establishing clear guidelines |
| Lack of diversity in the development team | Models may reflect the biases of the development team | Promoting diversity and inclusivity in the development team |
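The oversampling strategy listed in the first row of the table can be sketched in a few lines. This minimal version assumes each record carries a group field (the field name `group` and the data are hypothetical) and duplicates randomly chosen records from smaller groups until every group matches the largest one.

```python
import random
from collections import defaultdict


def oversample_by_group(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until every group
    matches the size of the largest one (simple random oversampling)."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw with replacement to fill the gap for under-represented groups.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical dataset: 90 records from group "A", 10 from group "B".
data = [{"group": "A", "x": i} for i in range(90)] + [{"group": "B", "x": i} for i in range(10)]
balanced = oversample_by_group(data, "group")
print(sum(r["group"] == "B" for r in balanced), len(balanced))  # 90 180
```

Duplicating records adds no new information and can make a model overfit to the few examples it repeats, so collecting more representative data remains the stronger fix where it is feasible.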

Identifying AI Bias in Action

Detecting and mitigating bias in AI systems is crucial for ensuring fairness and equitable outcomes. Understanding how AI models exhibit bias requires a multifaceted approach that examines not only the data used to train the models but also the algorithms themselves and the outputs they generate. Methods for identifying bias in action are diverse and often involve analyzing model predictions and outputs against established metrics of fairness and equity.

Identifying bias in AI systems is an iterative process. It often requires revisiting the dataset, re-evaluating the model architecture, and re-examining the outputs against various benchmarks. Analyzing the outputs for potential discriminatory outcomes is a key step in this process, and the methods used to evaluate AI models must be robust and comprehensive.

Methods for Detecting AI Bias in Different AI Systems

Various methods are available to detect AI bias in different AI systems. These methods vary based on the type of AI system and the nature of the bias being investigated. A key aspect of identifying bias involves evaluating the model’s outputs against established metrics of fairness and equity.

- Statistical Analysis: Statistical methods can be used to compare performance metrics across demographic groups. t-tests and ANOVA compare mean values such as average scores, while chi-squared or two-proportion tests compare rates such as error rates. For example, if a facial recognition system shows a higher error rate for individuals from a particular racial group, statistical analysis can help determine whether this difference is statistically significant or plausibly due to random chance.

- Comparative Analysis: Comparing the performance of the AI system across different subgroups helps identify potential biases. For instance, comparing the loan approval rates of individuals from different income brackets can highlight disparities in the model’s output.

- Qualitative Analysis: Examining the model’s outputs for patterns or inconsistencies can reveal potential bias. For example, if a sentiment analysis model consistently misinterprets negative statements from one demographic group, this could indicate a bias in the model’s training data.
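For the statistical analysis described above, a difference between two groups’ error rates can be checked with a two-proportion z-test, a standard test for comparing proportions. The sketch below uses only the Python standard library; the function name and the audit counts are hypothetical, and the normal approximation it relies on assumes reasonably large counts in each group.

```python
import math


def two_proportion_z_test(errors_a, n_a, errors_b, n_b):
    """Two-sided z-test for a difference between two error rates.

    Uses the pooled proportion under the null hypothesis that both groups
    share the same underlying error rate. Returns (z, p_value).
    """
    p_a, p_b = errors_a / n_a, errors_b / n_b
    pooled = (errors_a + errors_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical audit counts: misidentifications out of 400 test images per group.
z, p = two_proportion_z_test(errors_a=48, n_a=400, errors_b=20, n_b=400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

For a 2x2 table this test is equivalent to a chi-squared test without continuity correction (the chi-squared statistic equals z squared). A significant result indicates that the gap is unlikely to be chance; it does not by itself show why the gap exists.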

Evaluating AI Models for Fairness and Equity

Evaluating fairness and equity in AI models involves using metrics and benchmarks to assess the model’s performance across different demographic groups. This ensures that the model’s outputs are not disproportionately impacting specific groups. Fairness and equity are paramount in ensuring that AI systems do not perpetuate or exacerbate existing societal inequalities.

- Disparate Impact: This method examines whether the AI system’s output affects different groups disproportionately. A significant difference in the rate of a specific outcome (e.g., loan approvals) for different demographic groups can indicate disparate impact.

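- Equal Opportunity: This criterion requires that individuals who genuinely qualify for the favorable outcome have the same chance of receiving it regardless of their demographic group, that is, equal true positive rates across groups. For example, if a hiring system selects qualified candidates from one demographic group at a higher rate than equally qualified candidates from another, this could suggest a violation of equal opportunity principles.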

- Predictive Parity: This criterion requires that a favorable prediction be equally reliable across demographic groups, that is, equal precision (positive predictive value). If, for example, the share of approved applicants who actually repay their loans differs significantly between groups, it might indicate bias in the data or the model itself.
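The three criteria above can be computed from the same audit data. The sketch below is a minimal, dependency-free version that assumes binary labels and predictions where 1 is the favorable outcome; the function names and the ten-applicant sample are hypothetical. For the disparate impact ratio (lowest group selection rate divided by the highest), a widely used rule of thumb from US employment-selection guidelines, the "four-fifths rule", flags values below 0.8.

```python
from collections import defaultdict


def group_fairness_report(y_true, y_pred, groups):
    """Per-group selection rate, TPR, and PPV for binary labels/predictions.

    1 means the favorable outcome (e.g. loan approved / loan repaid).
    """
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "tp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        c["actual_pos"] += truth
        c["tp"] += int(truth == 1 and pred == 1)

    report = {}
    for group, c in counts.items():
        report[group] = {
            # Disparate impact compares these rates: P(pred = 1 | group).
            "selection_rate": c["pred_pos"] / c["n"],
            # Equal opportunity compares TPR: P(pred = 1 | label = 1, group).
            "tpr": c["tp"] / c["actual_pos"] if c["actual_pos"] else float("nan"),
            # Predictive parity compares PPV: P(label = 1 | pred = 1, group).
            "ppv": c["tp"] / c["pred_pos"] if c["pred_pos"] else float("nan"),
        }
    return report


def disparate_impact_ratio(report):
    """Lowest group selection rate divided by the highest."""
    rates = [r["selection_rate"] for r in report.values()]
    return min(rates) / max(rates) if max(rates) > 0 else float("nan")


# Hypothetical audit sample: five applicants from each of two groups.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

report = group_fairness_report(y_true, y_pred, groups)
for group, metrics in report.items():
    print(group, {name: round(value, 2) for name, value in metrics.items()})
print("disparate impact ratio:", round(disparate_impact_ratio(report), 2))
```

In this toy sample group B is selected far less often and has a lower true positive rate, yet its positive predictive value is higher than group A’s, so the criteria can point in different directions. When groups have different base rates, an imperfect classifier generally cannot satisfy predictive parity together with equal error rates, so teams have to decide which criterion matters most for the application.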

Analyzing Model Outputs for Potential Discriminatory Outcomes

Analyzing model outputs is a critical step in identifying potential discriminatory outcomes. This process often involves examining the model’s predictions for patterns that suggest unfair or biased treatment of certain groups. Such analysis can reveal hidden biases and contribute to developing more equitable AI systems.

- Identifying Patterns: Carefully examine model outputs for consistent patterns or trends across different demographic groups. For instance, if a model used for loan applications consistently denies loans to individuals from a specific socioeconomic background, this warrants further investigation.

- Comparing Predictions: Comparing predictions made by the model for different groups can reveal disparities. For example, if a model used for determining insurance premiums shows significantly higher premiums for individuals from a particular geographic area, this difference could be a result of bias.

- Using Interpretability Tools: Leveraging interpretability tools can help explain the reasons behind a model’s decisions. This allows for a more nuanced understanding of the model’s output and potential biases.
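Permutation importance is one common model-agnostic interpretability technique: shuffle a single input feature and measure how much the model’s accuracy drops. The sketch below is a minimal version that assumes the model is exposed as a plain Python function over tuple-shaped rows; the model, feature names, and data are hypothetical. scikit-learn provides a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, rows, labels, feature_index, seed=0):
    """Accuracy drop when one feature column is randomly shuffled.

    A large drop means the model relies heavily on that feature. If the
    feature is a protected attribute or a likely proxy for one (for example a
    postcode), that reliance deserves a closer look.
    """
    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    column = [r[feature_index] for r in rows]
    random.Random(seed).shuffle(column)
    # Rebuild each row (a tuple) with the shuffled value in place.
    shuffled = [r[:feature_index] + (v,) + r[feature_index + 1:] for r, v in zip(rows, column)]
    return baseline - accuracy(shuffled)


# Hypothetical rows of (income_band, postcode_flag) and their outcomes.
rows = [(i % 7, i % 2) for i in range(100)]
labels = [int(i % 2 == 0) for i in range(100)]


def model(row):
    """Hypothetical model that, problematically, decides on the postcode alone."""
    return int(row[1] == 0)


for index, name in enumerate(["income_band", "postcode_flag"]):
    print(name, round(permutation_importance(model, rows, labels, index), 2))
```

Shuffling the unused income feature leaves accuracy unchanged, while shuffling the postcode flag causes a large drop, exposing the proxy. A single shuffle is noisy, so in practice the shuffle is usually repeated and the drops averaged.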

Procedure for Testing Bias in an Image Recognition Model

This procedure outlines steps to test for bias in an image recognition model used for identifying objects in images.

- Data Collection: Gather a diverse dataset of images representing different categories and demographic groups. Ensure the data is balanced and representative of the target population.

- Model Training: Train the image recognition model using the collected dataset. Monitor the model’s performance metrics and ensure they are consistent across different groups.

- Bias Detection: Employ methods like statistical analysis and comparative analysis to detect potential bias in the model’s predictions. Specifically, evaluate the model’s accuracy and precision for different groups represented in the dataset.

- Output Analysis: Analyze the model’s outputs for potential discriminatory outcomes, particularly focusing on the accuracy and precision in identifying objects for different demographic groups. Compare prediction rates for different groups to determine if the model is unfairly misclassifying or failing to recognize objects in images of certain groups.

- Iteration and Refinement: Iterate on the model and data collection process based on the identified biases. Re-train the model with adjustments to address the detected bias, and re-evaluate the model’s performance.
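The bias detection and output analysis steps above can be sketched as a per-group accuracy check. This minimal version assumes each test image has a true object label, a predicted label, and a demographic group annotation; the function names, group names, and the 0.05 tolerance are hypothetical choices, not established thresholds.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Fraction of correctly recognized objects within each demographic group."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(accuracies, tolerance=0.05):
    """Best-minus-worst group accuracy, and whether it exceeds the tolerance."""
    gap = max(accuracies.values()) - min(accuracies.values())
    return gap, gap > tolerance


# Hypothetical test results: four images from each of two groups.
y_true = ["cat", "dog", "cat", "dog", "cat", "dog", "cat", "dog"]
y_pred = ["cat", "dog", "cat", "dog", "dog", "dog", "cat", "cat"]
groups = ["group_1"] * 4 + ["group_2"] * 4

accuracies = per_group_accuracy(y_true, y_pred, groups)
gap, flagged = accuracy_gap(accuracies)
print(accuracies, f"gap={gap:.2f}", "investigate" if flagged else "ok")
```

With only a handful of images per group a gap like this could be noise, so a real audit would pair it with a significance test and a larger, balanced test set before retraining.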

Mitigating AI Bias

Addressing bias in AI systems is crucial for ensuring fairness and equitable outcomes. Effective mitigation strategies involve careful attention to data quality, algorithm design, and system auditing. By proactively identifying and rectifying biases, we can foster trust and responsible AI development.

Developing unbiased AI systems requires a multi-faceted approach. This involves not only understanding the sources of bias within the data and algorithms but also implementing techniques to reduce or eliminate these biases. This proactive approach is vital for building ethical and trustworthy AI.

Strategies for Reducing Bias in Training Datasets

Careful data collection and meticulous cleaning are essential steps in mitigating bias in AI training datasets. Incomplete or skewed data can introduce undesirable biases into the model.

- Diverse and Representative Data Collection: Ensuring a dataset accurately reflects the diversity of the population it aims to serve is critical. This includes collecting data from various demographics, backgrounds, and experiences. For example, if an AI system is being trained to assess loan applications, the dataset should include applications from individuals of different races, genders, and socioeconomic backgrounds.

Collecting data from a broad spectrum of individuals will help reduce the risk of skewed data representing only a particular subset of the population.

- Data Cleaning and Preprocessing: Data cleaning involves identifying and correcting inconsistencies, errors, and missing values within the dataset. This process is vital for removing inaccuracies that could perpetuate bias. For instance, identifying and correcting biased labels in image datasets for facial recognition is crucial. Similarly, ensuring consistent formatting and handling of data from various sources is vital for preventing bias.

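Data preprocessing techniques such as normalization and standardization put features on comparable scales; on their own, however, they do not remove bias against particular groups, so they complement rather than replace the bias-specific steps described here.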

- Addressing Bias in Labels: Biased labels within datasets can significantly influence the AI system’s output. If the data labels themselves reflect societal biases, the AI model will inevitably perpetuate these biases. For example, in image recognition, ensuring that images are labeled consistently and without implicit bias is essential.
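Where collecting new data is not possible, one established preprocessing alternative to duplicating records is reweighing: each training example receives a weight chosen so that, in the weighted data, group membership and the label are statistically independent. The weight for an example in group g with label y is P(g) × P(y) / P(g, y). The sketch below assumes group and label are available for every training record; the function names and data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of the group's total weight that sits on positive labels."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


# Hypothetical historical labels: group A has a 4/6 positive rate, group B 1/4.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 2))  # 0.5 for both
```

The weights are then passed to a training routine that accepts per-example weights; many scikit-learn estimators, for instance, take a `sample_weight` argument in `fit`. Equalizing label rates this way treats the historical difference between groups as unwanted, which is itself an assumption the team should be able to justify.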

Techniques for Designing Fair and Unbiased Algorithms

Designing fair and unbiased algorithms is a critical aspect of responsible AI development. A crucial element is incorporating fairness constraints directly into the algorithm’s design.

- Fairness-Aware Algorithm Design: Developing algorithms that explicitly consider fairness criteria during training can mitigate bias. This involves incorporating fairness constraints into the optimization process. For example, fairness-aware machine learning algorithms can be designed to ensure that a loan application approval rate is consistent across different demographic groups.

- Algorithmic Transparency and Explainability: Transparency in the decision-making process is crucial for understanding how algorithms arrive at their conclusions. Explaining how an algorithm reaches its decisions makes its behavior easier to inspect, which helps in identifying and rectifying potential biases and fosters trust in AI systems.
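The fairness-aware design idea above can be illustrated by adding a penalty term to a model’s training loss. The sketch below assumes NumPy is available and trains a logistic regression by gradient descent on the usual cross-entropy plus λ (`lam` in the code) times the squared gap in mean predicted approval probability between two groups, which is a demographic-parity penalty. The data is synthetic, and the value λ = 5 is an arbitrary illustrative choice; production work would more likely use a maintained library such as Fairlearn.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_fair_logreg(X, y, group, lam=0.0, lr=0.5, steps=3000):
    """Gradient descent on mean cross-entropy + lam * gap**2, where gap is the
    difference in mean predicted probability between group 1 and group 0."""
    w = np.zeros(X.shape[1])
    in_1, in_0 = group == 1, group == 0
    for _ in range(steps):
        p = sigmoid(X @ w)
        grad = X.T @ (p - y) / len(y)             # cross-entropy gradient
        gap = p[in_1].mean() - p[in_0].mean()
        dp = p * (1.0 - p)                         # d p_i / d(x_i . w)
        dgap = X[in_1].T @ dp[in_1] / in_1.sum() - X[in_0].T @ dp[in_0] / in_0.sum()
        grad += lam * 2.0 * gap * dgap             # demographic-parity penalty gradient
        w -= lr * grad
    return w


def parity_gap(X, w, group):
    p = sigmoid(X @ w)
    return p[group == 1].mean() - p[group == 0].mean()


# Synthetic data: historical labels favor group 1, and "proxy" leaks group membership.
rng = np.random.default_rng(0)
n = 2000
group = rng.integers(0, 2, n)
proxy = group + rng.normal(0.0, 1.0, n)
skill = rng.normal(0.0, 1.0, n)
y = (skill + 0.8 * group + rng.normal(0.0, 0.5, n) > 0.4).astype(float)
X = np.column_stack([np.ones(n), proxy, skill])

gap_base = parity_gap(X, train_fair_logreg(X, y, group, lam=0.0), group)
gap_fair = parity_gap(X, train_fair_logreg(X, y, group, lam=5.0), group)
print(f"parity gap without penalty: {gap_base:.3f}")
print(f"parity gap with penalty:    {gap_fair:.3f}")
```

Raising λ typically shrinks the gap further at some cost in accuracy. Demographic parity is only one possible target; penalizing the gap in true positive rates instead would encode equal opportunity.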

Auditing AI Systems to Identify and Address Bias

Regular audits of AI systems are necessary to detect and address potential biases. These audits should cover data, algorithms, and outputs.

- Bias Detection Tools and Techniques: Using appropriate tools and techniques to detect bias in AI systems is crucial. These tools can help identify patterns and anomalies that might indicate bias. For example, algorithmic bias detection tools can analyze the model’s outputs to identify potential fairness issues.

- Bias Mitigation Strategies: After bias detection, the next step is to implement effective mitigation strategies. These strategies can range from re-training the model with modified data to incorporating fairness constraints into the algorithm’s design. For instance, if a bias is found in the facial recognition system’s accuracy, the dataset used to train the model could be adjusted or rebalanced.

Also, the model’s architecture can be modified to improve fairness.

Improving Fairness in Specific AI Applications

Addressing bias in specific applications like facial recognition requires tailored approaches.

- Facial Recognition: In facial recognition systems, bias can manifest in misidentification rates across different demographics. To address this, the dataset used to train the model needs to be carefully curated to reflect a more diverse population. Also, the algorithm’s design needs to be carefully evaluated to identify any biases. Moreover, regular audits can help identify and mitigate potential bias.

Ethical Considerations

The presence of bias in AI systems raises profound ethical concerns that demand careful consideration across various sectors. The potential for perpetuating existing societal inequalities, leading to discrimination and unfair outcomes, necessitates a proactive approach to mitigating these risks. Understanding the ethical implications and developing strategies for responsible AI development and deployment are paramount.

AI systems, if not carefully designed and monitored, can exacerbate existing societal biases. The algorithms used to train these systems can inadvertently mirror and amplify prejudices present in the data they are trained on, leading to unfair or discriminatory outcomes. Recognizing this risk and actively working to address it are essential for building trust and ensuring fairness in the application of AI.

Ethical Implications in Various Sectors

AI bias can manifest across diverse sectors, each with unique ethical considerations. In healthcare, biased AI systems might lead to misdiagnosis or unequal access to treatment, impacting patient outcomes. In the criminal justice system, biased algorithms could contribute to discriminatory sentencing or profiling, potentially undermining due process and fairness. In employment, biased AI tools might lead to hiring discrimination or unequal opportunities, exacerbating existing inequalities in the workforce.

When financial institutions use biased AI, the result can be unequal lending practices and unequal access to financial resources, further widening the gap between different socioeconomic groups. This broad impact necessitates a nuanced understanding of the ethical challenges in each sector.

Societal Impact of Biased AI Systems

Biased AI systems can have a profound and far-reaching impact on society. Unequal access to resources, diminished opportunities, and reinforced stereotypes are just a few of the potential consequences. These systems can perpetuate and amplify existing societal inequalities, leading to a feedback loop that hinders progress towards a more equitable society. Furthermore, the erosion of trust in institutions and the potential for social unrest can result from the perceived unfairness of biased AI systems.

Examples of Discrimination and Inequality

Examples of how AI bias can lead to discrimination and inequality are abundant. A facial recognition system trained primarily on images of light-skinned individuals might perform poorly on identifying darker-skinned individuals, leading to misidentification and potential bias in law enforcement. Similarly, an algorithm used to assess loan applications might discriminate against individuals from certain socioeconomic backgrounds, denying them access to crucial financial resources.

These examples highlight the potential for AI to exacerbate existing societal inequalities.

Responsibility of Developers and Users

Developers and users of AI systems bear a significant responsibility in addressing AI bias. Developers should prioritize fairness and equity in the design and development process, actively seeking out and mitigating potential biases in their data and algorithms. Users should be aware of the potential for bias and critically evaluate the outputs of AI systems, ensuring their use aligns with ethical principles.

Transparency and accountability are crucial for fostering trust and mitigating potential harm.

Ethical Frameworks for Evaluating AI Bias

Different ethical frameworks provide diverse perspectives on evaluating AI bias. A comparison of these frameworks helps illuminate the multifaceted nature of the challenge.

| Framework | Key Concepts | Strengths | Weaknesses |
|---|---|---|---|
| Utilitarianism | Maximizing overall well-being and minimizing harm. | Focuses on the broader impact of AI systems. | Can overlook individual rights and potential for injustice. |
| Deontology | Adhering to moral duties and principles. | Provides a strong basis for fairness and justice. | Can be inflexible in complex situations. |
| Virtue Ethics | Focusing on the character and motivations of individuals involved. | Promotes responsible development and use of AI. | Can be subjective and lack clear guidelines. |
| Justice and Equity | Promoting fairness, equality, and inclusivity. | Directly addresses the problem of bias in AI systems. | Requires careful consideration of competing interests and values. |

Case Studies of AI Bias

Understanding and addressing AI bias requires examining real-world examples of its negative impact. Analyzing these case studies allows us to identify patterns, develop effective mitigation strategies, and ultimately build more equitable AI systems. This section delves into specific instances where AI bias manifested and the subsequent corrective actions taken.

A Case Study: Loan Application Bias

AI-powered loan application systems, designed to automate the evaluation process, have been implicated in discriminatory lending practices. One instance involved an algorithm that exhibited a significant bias against loan applications submitted by individuals from specific demographic groups. The algorithm, trained on historical data, inadvertently learned and amplified existing societal biases, leading to disproportionately lower approval rates for certain applicant groups.

Bias Identification and Addressing

The bias was identified through a comprehensive analysis of the algorithm’s output that compared approval rates across demographic groups. Statistical analyses revealed a stark disparity, with significantly lower approval rates for applicants from particular minority groups. The disparity was statistically significant and could not be explained by differences in factors such as credit history and income. This analysis prompted an investigation into the algorithm’s training data.

Rectifying the Situation and Preventing Future Occurrences

To rectify the situation, the developers re-evaluated the training dataset, identifying and removing historical biases. They also introduced new features and metrics to assess applicants’ financial situations more objectively, and they implemented an audit process to monitor the algorithm’s output for potential bias in real time. Finally, they introduced fairness constraints into the algorithm’s design, intended to keep the model from discriminating against any demographic group.

Together, these measures were designed to prevent future discriminatory practices, although ongoing monitoring remains necessary to confirm that they continue to work.

Real-World Examples of AI Bias and Corrective Actions

Numerous real-world AI systems have demonstrated biases. Facial recognition systems, for example, have shown inaccuracies in identifying individuals with darker skin tones. Corrective actions include retraining the algorithms on more diverse datasets and incorporating techniques to mitigate bias in the facial recognition models. In hiring processes, algorithms designed to predict job performance have been found to perpetuate gender bias.

Addressing this involved incorporating blind resume screening techniques, allowing hiring managers to assess candidates without demographic information.

Examples of AI Systems Exhibiting Bias

- Criminal Justice: Risk assessment tools used in criminal justice have shown bias against minority groups. This was addressed by incorporating additional variables to the models and recalibrating their parameters, focusing on a more balanced evaluation of risk factors.

- Job Applications: Algorithms used in recruitment have been found to discriminate against women and minorities in some contexts. Corrective actions include using blind resume screening and incorporating diversity training for the hiring teams.

- Image Recognition: Facial recognition systems have exhibited difficulty identifying individuals with darker skin tones. Mitigation strategies involve expanding the datasets used for training to include more diverse populations and incorporating bias mitigation techniques during algorithm design.

Tools and Techniques for Bias Detection

Identifying bias in AI models is crucial for building fair and reliable systems. A variety of tools and techniques are available, each with strengths and limitations. Careful consideration of these factors allows for the selection of the most appropriate method for a given application. This section explores various methods and their suitability for detecting bias in AI models, with a focus on implementation in a healthcare context.

Statistical Methods for Bias Detection

Statistical methods are fundamental for analyzing the distribution of data and identifying potential biases. These methods often involve examining disparities in model performance across different demographic groups. For instance, comparing the accuracy of a model when applied to different patient subgroups can reveal systematic biases.

- Disparate Impact Analysis: This method compares the rates of favorable outcomes (e.g., successful treatment) for different demographic groups. A significant difference suggests potential bias. A limitation is that it does not pinpoint the cause of the disparity; it only shows that a disparity exists. For example, if a loan application model approves fewer applications from minority groups, disparate impact analysis can highlight this difference, but it does not explain why it is happening.

- Regression Analysis: Analyzing the relationship between model predictions and protected attributes (e.g., race, gender) can reveal if the model is unfairly influenced by these factors. This approach allows for the identification of the specific features contributing to the bias. A limitation is that it can be complex to interpret, requiring careful consideration of potential confounding factors. For instance, in predicting patient survival, if a model exhibits a statistically significant association between survival rates and gender, further investigation is required to understand if the association is a direct effect of gender or is confounded by other variables like age or disease severity.

- Variance Analysis: This method focuses on examining the variance in model predictions for different demographic groups. A significant difference in variance could indicate bias, especially if it’s associated with a protected attribute. The limitation lies in interpreting the variance in a complex model. For instance, if the variance in predicted hospital readmission rates is significantly higher for patients of a specific ethnicity, this might signal bias, but the root cause needs to be further explored.
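The confounding problem described under regression analysis can be made concrete with synthetic data. In the sketch below, which assumes NumPy and uses invented numbers, a hypothetical model’s score depends only on age, but because one gender group is older on average, a naive comparison shows a gap by gender. Regressing the score on gender while controlling for age makes the gender coefficient vanish.

```python
import numpy as np

# Hypothetical audit data: the model's predicted survival score depends only
# on age, but in this sample one gender group is older on average.
rng = np.random.default_rng(1)
n = 500
gender = rng.integers(0, 2, n)                  # protected attribute, coded 0/1
age = 50 + 10 * gender + rng.normal(0, 8, n)    # confounder correlated with gender
score = 1.5 - 0.01 * age                        # model output: age only

# Naive comparison: difference in mean score between the two gender groups.
raw_gap = score[gender == 1].mean() - score[gender == 0].mean()

# Regress the model's score on gender while controlling for age.
design = np.column_stack([np.ones(n), gender, age])
coef, *_ = np.linalg.lstsq(design, score, rcond=None)
print(f"raw gap in mean score: {raw_gap:.3f}")
print(f"gender coefficient controlling for age: {coef[1]:.3f}")
```

Whether a variable should be controlled for is itself a judgment: adjusting for a feature that is a proxy for the protected attribute, such as a postcode, can hide exactly the bias the analysis is meant to find.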

Machine Learning-Based Methods for Bias Detection

These methods utilize machine learning algorithms to identify patterns and anomalies in data that suggest bias.

- Algorithmic Auditing Tools: These tools analyze the model’s decision-making process to identify points where biases might be introduced. These tools often leverage techniques like feature importance analysis and visualization to expose bias in the model. A limitation is the need for specialized expertise to interpret the results. For example, an auditing tool might highlight that a model frequently misclassifies patients from a specific region, indicating a potential bias that needs further investigation.

- Bias-Aware Model Training: This approach modifies the training process to explicitly minimize bias. It can involve adjusting the loss function or adding constraints to the model to prevent bias from being learned. A limitation is that the design of these techniques can be quite complex and not readily available for all models.

Implementing Bias Detection in Healthcare AI

In healthcare, implementing bias detection requires careful consideration of the specific data and models used. For instance, in a model predicting the likelihood of a patient needing a particular treatment, bias detection methods can be applied to identify if the model’s predictions are systematically different for various demographic groups.

- Data Collection and Preparation: Ensure data includes relevant protected attributes. Carefully review and clean the data to handle missing values or outliers that might affect the results.

- Selection of Appropriate Methods: Choose statistical or machine learning-based methods that align with the specific type of bias you’re concerned about and the characteristics of the model.

- Interpretation of Results: Carefully analyze the results to identify potential biases and determine whether they are statistically significant.

Future Trends in Addressing AI Bias

The ongoing development of AI systems necessitates a proactive approach to mitigating bias. Emerging research and development efforts are crucial for building fairer and more equitable AI models. Future trends will focus on incorporating fairness and equity considerations into the entire design and development lifecycle, rather than as an afterthought.

Emerging Research and Development Efforts

Advancements in AI fairness research are driving the development of new techniques to identify and mitigate bias in algorithms. Researchers are exploring methods for data preprocessing, model architecture modifications, and evaluation metrics. These methods aim to improve the accuracy and reliability of bias detection while also ensuring the fairness and transparency of AI systems. A key area of focus is creating algorithms that can adapt to changing societal norms and data distributions rather than relying on static datasets.

Forecast of Future Bias Detection and Mitigation Techniques

Future trends in bias detection and mitigation will likely involve more sophisticated and automated approaches. These techniques will move beyond simple statistical analyses to incorporate more nuanced understanding of societal factors. For example, researchers are exploring methods that can identify biases in datasets by analyzing the relationships between different attributes. Furthermore, there’s an increasing focus on developing explainable AI (XAI) techniques, allowing users to understand why a particular decision was made by an AI system.

This transparency is crucial for building trust and fostering confidence in AI systems.

Incorporating Fairness and Equity into the Design and Development Lifecycle

Integrating fairness and equity into the AI design and development lifecycle requires a paradigm shift. It’s crucial to involve diverse stakeholders in the process from the outset, considering their perspectives and experiences. This collaborative approach ensures that AI systems reflect the values and needs of the diverse populations they will serve. For instance, when designing a loan application system, developers should involve representatives from marginalized communities to understand their specific needs and potential biases.

Areas for Future Research and Development

Improved bias detection methods remain a key area of focus. Research efforts should concentrate on creating more robust and reliable methods that can identify subtle and complex biases. Furthermore, there’s a need for techniques to quantify the impact of bias on various populations and evaluate the effectiveness of mitigation strategies. A significant focus will also be placed on the development of AI models that can learn from and adapt to changing societal contexts.

This adaptability is essential to ensure the long-term fairness and equity of AI systems. Examples of such research include examining the impact of AI-driven hiring tools on diverse applicant pools and analyzing the bias embedded in facial recognition software across different ethnicities.

Final Summary

In conclusion, this guide has presented a detailed analysis of AI bias, emphasizing the importance of understanding its origins, manifestations, and the crucial steps for mitigation. The responsibility for developing and deploying unbiased AI rests with both developers and users. By actively addressing bias through careful data analysis, algorithm design, and continuous evaluation, we can move towards creating more ethical and equitable AI systems for the future.