AI chatbots are rapidly becoming a primary source of information, but their responses aren’t always accurate. This guide offers a practical framework for evaluating the reliability of AI-generated content and separating accurate claims from false ones. From understanding how AI constructs its answers to recognizing manipulative tactics, it equips you to navigate AI-generated information with confidence.

This guide delves into the essential steps for verifying the authenticity and credibility of information from AI chatbots. It covers diverse aspects, including analyzing the source’s reliability, assessing the validity of data and statistics, scrutinizing images and multimedia, and understanding the potential for bias. By mastering these techniques, you can effectively use AI tools while mitigating the risk of misinformation.

Understanding AI-Generated Information

AI chatbots, powered by large language models, are becoming increasingly sophisticated at generating human-like text. They excel at answering questions, crafting stories, and even translating languages. However, to evaluate the information they provide, it’s crucial to understand the limitations of these systems and how they arrive at their answers.

AI chatbots generate responses from patterns learned during training on vast amounts of text. These patterns capture relationships between words, allowing the model to predict the most likely words or phrases to follow in a given context. This process, sometimes described as “predictive text generation,” does not rest on a deep understanding of the world of the kind humans have. Instead, it is a statistical process that captures correlations in the training data.

How AI Chatbots Generate Responses

AI chatbots use complex algorithms to process and generate text. During training, these algorithms analyze vast datasets of text and code, identifying patterns and relationships between words and phrases. When answering, the chatbot uses these patterns to predict the most likely next word in a sequence, one step at a time. More precisely, it predicts the next token, which may be a whole word or a fragment of one. This predictive modeling allows the chatbot to construct coherent and often human-like responses to user queries.

Importantly, these algorithms don’t truly understand the meaning or context of the text; rather, they identify statistical probabilities.
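To make statistical next-word prediction concrete, here is a toy sketch. It is a bigram model that counts which word follows which in a tiny, made-up corpus, then repeatedly picks the most frequent follower. Real chatbots use neural networks over subword tokens and far longer contexts, so this is only an analogy. The corpus and the function names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent follower; nothing here checks truth."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no observed continuation for this word
        # most_common(1) breaks ties by first-seen order
        out.append(candidates.most_common(1)[0][0])
    return out


# Hypothetical toy corpus
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 5)))
```

The output, "the cat sat on the cat," never appears in the corpus. Every adjacent word pair is statistically plausible, yet the sentence as a whole is not grounded in anything. This is a small-scale picture of how fluent but false output can arise.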

Potential for Inaccuracy and Misinformation

AI chatbots are susceptible to generating inaccurate or misleading information due to the limitations of their training data and the inherent nature of predictive text generation. The quality of the information they provide depends significantly on the quality and comprehensiveness of the data they were trained on. If the training data contains biases or inaccuracies, the chatbot will likely reflect these flaws in its responses.

Common Types of Errors

AI chatbots can make various errors in their responses. These include:

- Hallucinations: AI chatbots sometimes fabricate information, such as nonexistent quotes, citations, or statistics. The fabricated content may sound plausible but is false. It occurs because the algorithm predicts a likely-sounding sequence of words without any check that the resulting statement is grounded in reality.

- Bias: The training data often reflects societal biases. As a result, AI chatbots can unintentionally perpetuate these biases in their responses, leading to unfair or discriminatory outcomes. For example, if the training data disproportionately portrays men in leadership roles, the chatbot might be more likely to recommend men for managerial positions.

- Lack of context: AI chatbots might struggle to understand the nuances of complex questions or statements. They may provide a response that is technically correct but misses the underlying context or intent of the user’s request.

- Oversimplification: AI chatbots may oversimplify complex topics, failing to capture the intricacies and subtleties of the information. This can lead to a misrepresentation of the subject matter.

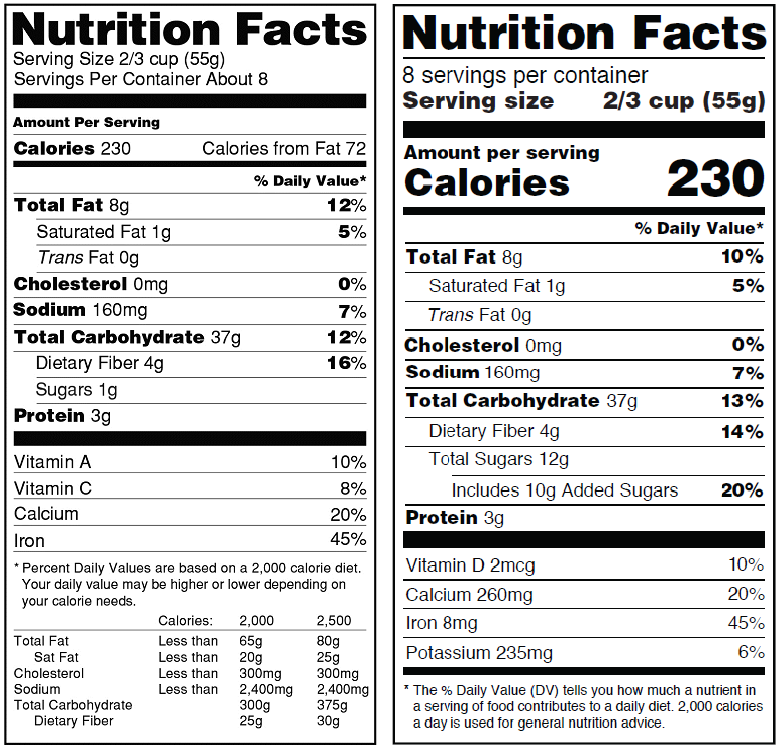

Comparing AI Chatbot Platforms

The accuracy of different AI chatbot platforms varies significantly. A comparison table highlighting key features and performance metrics can aid in evaluating different platforms.

| Platform | Accuracy (estimated) | Strengths | Weaknesses |
|---|---|---|---|
| Platform A | 85% | Strong on factual questions, good at summarizing information. | Tends to oversimplify complex topics, occasional hallucinations. |
| Platform B | 78% | Excellent at creative writing, good at generating different perspectives. | Lower accuracy on factual questions, prone to bias. |
| Platform C | 92% | Highly accurate on factual queries, strong in technical domains. | Limited in creative writing, might struggle with nuanced questions. |

Note: Accuracy estimates are based on various evaluations and benchmarks and may vary depending on the specific query.
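A single headline accuracy figure can hide large differences between query types. The minimal sketch below computes accuracy overall and per query category from a graded sample. It assumes you have checked each answer yourself. The categories and results are invented for illustration and are not measurements of any real platform.

```python
from collections import defaultdict

# Hypothetical graded sample: (query category, was the answer correct?)
graded = [
    ("factual", True), ("factual", True), ("factual", True), ("factual", False),
    ("technical", True), ("technical", False),
    ("nuanced", False), ("nuanced", False), ("nuanced", True), ("nuanced", False),
]


def accuracy(results):
    """Share of correct answers (True counts as 1), or None for an empty list."""
    return sum(results) / len(results) if results else None


def accuracy_by_category(records):
    """Group graded answers by category and compute accuracy for each group."""
    buckets = defaultdict(list)
    for category, correct in records:
        buckets[category].append(correct)
    return {cat: accuracy(vals) for cat, vals in buckets.items()}


overall = accuracy([correct for _, correct in graded])
per_category = accuracy_by_category(graded)
print(f"overall: {overall:.0%}")
for cat, acc in per_category.items():
    print(f"{cat}: {acc:.0%}")
```

In this invented sample, an overall 50% hides a spread from 75% on factual questions to 25% on nuanced ones. With so few graded answers per category, each figure is itself very rough.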

Evaluating the Source

Assessing the reliability of information from AI chatbots is crucial for responsible use, and evaluating the source is the first step in deciding whether AI-generated content can be trusted. This involves identifying key indicators of reliability, evaluating the plausibility of the information presented, recognizing unreliable sources, and cross-referencing claims.

Consider factors such as the chatbot’s training data, the method it uses to generate responses, and any biases present in that data.

Key Indicators of a Chatbot’s Reliability

Identifying a chatbot’s reliability requires careful examination of its source and response methodology. Factors to consider include the training data’s comprehensiveness and currency, the algorithm’s design and potential biases, and the chatbot’s ability to cite sources. A chatbot trained on a limited or outdated dataset may present inaccurate or outdated information. Likewise, an algorithm prone to bias may produce skewed or prejudiced results.

- Data Source and Currency: A chatbot’s reliability hinges on the quality and timeliness of its training data. A chatbot trained on a dataset lacking diverse perspectives or containing outdated information may present skewed or inaccurate results. For example, a chatbot trained primarily on information from a single news source may consistently present a biased viewpoint. The more diverse and up-to-date the training data, the more likely the chatbot is to be reliable.

- Response Methodology: Understanding how the chatbot generates responses is crucial. A chatbot that directly cites its sources is more trustworthy than one that doesn’t. A generation process that reproduces or amplifies biases in the training data can also lead to misleading information.

- Source Citations: Reliable chatbots should be able to cite sources for their claims. This allows users to independently verify the information presented. Lack of citations or vague references are strong indicators of unreliability.

Plausibility of Information

Determining the plausibility of information from an AI chatbot involves a critical evaluation process. Assessing the context, comparing it with known facts, and looking for inconsistencies are key steps. If a claim contradicts well-established knowledge or appears too good to be true, it warrants further scrutiny. For example, a claim about a scientific breakthrough without supporting evidence from reputable sources should be treated with caution.

- Contextual Analysis: Assess the information within its context. Does the claim fit the overall narrative or discussion? Look for inconsistencies or illogical connections. For example, if a chatbot claims a new medical treatment cures all diseases, evaluate the context of the claim against established medical knowledge. A claim lacking sufficient supporting evidence should be approached with skepticism.

- Comparison with Known Facts: Compare the information with established facts and verified information. If a chatbot presents information that contradicts well-established knowledge, it should be scrutinized closely. For example, if a chatbot claims the Earth is flat, this should be viewed with significant skepticism given the overwhelming scientific consensus.

- Identification of Inconsistencies: Look for inconsistencies within the information itself. If a chatbot presents contradictory claims or information that doesn’t logically follow, treat it with suspicion. For example, a chatbot that first says a product has no side effects and later lists several warrants close scrutiny.

Unreliable or Biased Information Sources

Recognizing unreliable or biased sources is vital for avoiding misinformation. Examples include chatbots trained on biased data, chatbots run by organizations with a vested interest in promoting a particular viewpoint, and chatbots that are not transparent about their source data.

- Biased Data: Chatbots trained on biased datasets can perpetuate and amplify existing biases. This can lead to skewed or prejudiced information. For example, a chatbot trained primarily on information from a specific political party might consistently present a partisan viewpoint.

- Vested Interests: Chatbots created or controlled by organizations with a vested interest in a particular outcome may present biased information to advance their agenda. For example, a chatbot created by a company promoting a specific product might consistently present a positive view of that product.

- Lack of Transparency: Chatbots that do not clearly disclose their source data or methodology should be treated with caution. Without that transparency, it is difficult to assess the reliability of the information presented or its potential biases.

Verifying Information from Multiple Sources

Verification from multiple sources is essential for determining the accuracy and reliability of information. Cross-referencing claims with reputable sources, such as academic journals, news articles, and government reports, can help determine if a claim is supported by credible evidence.

- Cross-referencing: Always cross-reference information from a chatbot with other reputable sources. This practice helps confirm accuracy and identify potential biases. For instance, if a chatbot states a particular historical event occurred in a specific way, compare that information with multiple historical accounts from diverse sources.

Summary Table

| Characteristic | Reliable Chatbot Response | Unreliable Chatbot Response |
|---|---|---|
| Source Data | Diverse, up-to-date, and verifiable | Limited, outdated, or biased |
| Methodology | Transparent and clearly explained | Opaque or unclear |
| Source Citations | Present and readily accessible | Absent or vague |
| Plausibility | Consistent with known facts | Contradictory or illogical |
| Bias | Minimized and acknowledged | Significant and unacknowledged |

Fact-Checking Techniques

AI chatbots can provide a wealth of information, but users must critically evaluate the data presented. Effective fact-checking strategies are crucial for discerning accurate and reliable information from potentially misleading or inaccurate chatbot responses. This section details practical methods to verify AI-generated content.

Verifying Information from AI Chatbots

Careful scrutiny of AI chatbot responses is paramount. Begin by identifying the specific claims or statements within the chatbot’s output. Next, evaluate the source’s reliability and potential biases. Consider the context in which the information was presented. If the chatbot’s response cites a source, investigate that source independently to assess its credibility.

Cross-Referencing with Trusted Sources

Cross-referencing AI chatbot information with established, reliable sources is essential. This process involves consulting reputable news outlets, academic journals, government websites, and other authoritative sources. If the chatbot’s claims align with the information from trusted sources, it strengthens the credibility of the chatbot’s response. Conversely, discrepancies necessitate further investigation. For instance, if a chatbot states a specific statistic about global temperatures, verifying it against data from NASA or NOAA is critical.

Using Search Engines for Validity Assessment

Using search engines to assess the validity of chatbot claims is a valuable technique. Search for keywords or phrases taken directly from the chatbot’s output. Review the results, considering how many reputable sources support the claim and what the overall consensus is. If conflicting information emerges, scrutinize the evidence each source presents. A claim supported by a significant portion of reputable sources is more likely to be accurate.
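The tallying step can be sketched as follows. This assumes you have already read each search result and recorded whether it supports the claim, contradicts it, or does not address it. The source labels and the 75% threshold are hypothetical choices, not an established standard.

```python
from collections import Counter


def assess_claim(verdicts: dict[str, str], threshold: float = 0.75) -> str:
    """Summarize manually recorded verdicts: 'supports', 'contradicts', or 'unclear'."""
    counts = Counter(verdicts.values())
    decisive = counts["supports"] + counts["contradicts"]  # 'unclear' sources are ignored
    if decisive == 0:
        return "insufficient evidence"
    share = counts["supports"] / decisive
    if share >= threshold:
        return "likely accurate"
    if share <= 1 - threshold:
        return "likely inaccurate"
    return "contested: examine each source's evidence"


# Hypothetical verdicts recorded from reputable sources
verdicts = {
    "government agency report": "supports",
    "peer-reviewed study": "supports",
    "major news outlet": "supports",
    "trade publication": "contradicts",
    "encyclopedia entry": "unclear",
}
print(assess_claim(verdicts))
```

Counting is only a first pass. When sources conflict, the quality of each source’s evidence matters more than the number of sources on either side.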

Examples of Fact-Checking Tools and Resources

Numerous tools and resources can aid in fact-checking AI-generated information. Fact-checking websites, such as Snopes and PolitiFact, offer a wealth of resources for verifying claims. Independent journalism organizations often provide comprehensive analyses of information, including that generated by AI chatbots. These resources can offer insights into potential biases, inaccuracies, or misleading information.

Fact-Checking Websites and Focus Areas

| Fact-Checking Website | Focus Area |
|---|---|
| Snopes | Debunking urban legends, rumors, and misinformation across various topics. |
| PolitiFact | Evaluating the accuracy of political claims and statements. |
| FactCheck.org | Assessing the accuracy of factual claims, particularly in the political sphere. |
| Reuters | Providing verified news reports and fact-checking information across a broad range of topics. |
| AFP | Verifying news reports, analyses, and opinions, including those generated by AI chatbots. |

Recognizing Bias and Manipulation

AI chatbots, while powerful tools, can inherit and amplify biases present in the data they are trained on. These biases can surface in their responses, producing skewed or misleading information. Understanding how these biases operate and how to detect them is crucial for responsible use of these technologies. This section explores the mechanisms of bias in AI chatbot responses, techniques for manipulation, and methods for recognizing biased patterns.

AI chatbots are trained on massive datasets of text and code. If these datasets reflect societal biases, the chatbots will likely perpetuate and even amplify them. For instance, if a dataset predominantly features men in leadership roles, the chatbot might consistently portray men as more likely to hold positions of power, creating a skewed representation of gender roles.

How Biases Influence AI Chatbot Responses

Bias in AI chatbot responses stems from the data used to train them. If the training data contains stereotypes, prejudices, or discriminatory language, the chatbot will likely reflect those patterns in its own outputs. This can manifest in various forms, including skewed portrayals of certain groups, perpetuation of harmful stereotypes, and reinforcement of existing societal inequalities. For example, a chatbot trained on news articles from a specific region might display a biased perspective on global events, failing to represent diverse viewpoints.

Techniques Used to Manipulate or Mislead Through AI

AI chatbots can be exploited to create misleading or manipulative content. This can involve using sophisticated language patterns to subtly influence opinions, or even fabricating entirely false information while maintaining a semblance of credibility. These techniques can be particularly insidious because they can appear natural and persuasive, making it difficult to discern truth from falsehood. Techniques such as creating convincing but false narratives or using emotionally charged language are commonly employed.

For example, an AI chatbot could be used to generate convincing but fabricated quotes from historical figures or present fabricated news stories.

Detecting Patterns of Bias in Chatbot Responses

Identifying bias in chatbot responses requires careful scrutiny of the language used, the information presented, and the overall tone of the conversation. Look for inconsistencies, disproportionate representation of certain groups, and potentially biased language. Compare the chatbot’s answers across a variety of questions, examining how it addresses different groups or topics. Look for recurring themes or patterns that suggest underlying biases, and consider the sources of the information the chatbot presents.
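One rough way to spot disproportionate representation is to collect the chatbot’s answers to a set of comparable prompts and count gendered terms. The minimal sketch below does this. The word lists and sample responses are hypothetical, and raw counts only flag patterns worth a closer read; they do not prove bias on their own.

```python
import re
from collections import Counter

# Hypothetical, deliberately small word lists; a real audit would need far richer ones
GENDERED_TERMS = {
    "male": {"he", "him", "his", "man", "men"},
    "female": {"she", "her", "hers", "woman", "women"},
}


def gender_term_counts(responses: list[str]) -> Counter:
    """Count occurrences of each group's terms across all responses."""
    counts = Counter()
    for text in responses:
        for word in re.findall(r"[a-z']+", text.lower()):
            for group, terms in GENDERED_TERMS.items():
                if word in terms:
                    counts[group] += 1
    return counts


# Hypothetical answers to "Describe a typical CEO / nurse / engineer"
responses = [
    "A typical CEO is decisive; he sets strategy and his team follows.",
    "A nurse is caring; she monitors her patients closely.",
    "An engineer solves problems; he designs systems and tests his work.",
]
print(gender_term_counts(responses))
```

In this invented sample, male terms appear twice as often as female ones across the role descriptions. That pattern would justify rephrasing the prompts and checking whether it persists, rather than an immediate verdict of bias.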

Examples of Biased Information from Chatbots

Examples of biased information from chatbots are numerous and vary widely. A chatbot trained on historical data might portray women as being less influential than men in past centuries. Another example is a chatbot that, when asked about a specific political candidate, consistently uses language that is more positive or negative toward the candidate, depending on the training data.

Another example might be a chatbot presenting a narrow perspective on a current event, failing to account for opposing viewpoints. These examples demonstrate how biases can be embedded in seemingly neutral responses.

Table Illustrating Various Types of Bias and How to Identify Them in Chatbot Text

| Type of Bias | Description | How to Identify | Example |
|---|---|---|---|
| Gender Bias | Unequal or stereotypical portrayal of genders. | Look for disproportionate representation of one gender in specific roles or situations, or use of gendered language. | A chatbot describing historical figures consistently emphasizes the accomplishments of men, while downplaying those of women. |
| Racial Bias | Unequal or stereotypical portrayal of different races. | Look for disproportionate representation of certain racial groups in particular contexts or use of racially charged language. | A chatbot discussing criminal justice statistics disproportionately highlights negative outcomes for a specific racial group. |
| Cultural Bias | Unequal or stereotypical portrayal of different cultures. | Look for generalizations about cultures or nations, or biased descriptions of cultural practices. | A chatbot describing different countries consistently focuses on negative aspects, while overlooking positive ones. |
| Confirmation Bias | The tendency to favor information that confirms existing beliefs. | Look for responses that selectively highlight information supporting a particular viewpoint, while ignoring contradictory evidence. | A chatbot, when asked about climate change, only cites information that minimizes its impact. |

Contextual Analysis

Understanding the context surrounding an AI chatbot’s response is crucial for accurate evaluation. A statement that appears factually correct in isolation might be misleading or entirely inaccurate within its broader context. AI chatbots, while impressive in their ability to generate human-like text, lack the nuanced understanding of the world that humans have. They rely on patterns and relationships in the vast datasets they are trained on, so the quality of their responses hinges on the quality and representativeness of that training data.

Evaluating information within its context requires careful consideration of the surrounding details. These include the specific question asked, the overall conversation history, and any relevant background information. Extracting a phrase from a chatbot’s response and evaluating it in isolation risks overlooking nuances that could dramatically alter its meaning or validity. Analyzing the full context shows whether the response fits the circumstances and aligns with established knowledge.

Importance of Contextual Understanding

Accurate interpretation of AI-generated information depends heavily on comprehending the specific circumstances surrounding the statement. A statement’s meaning can shift dramatically based on the question it answers, the surrounding discussion, and the specific details of the situation being addressed. A response that is perfectly accurate within a particular context might be entirely irrelevant or even misleading in another.

Methods for Evaluating Accuracy within Context

Several methods can help you evaluate information accurately within its context. First, scrutinize the question itself. A poorly formulated or ambiguous question can lead to an inaccurate or misleading response. Second, examine the conversational history. Previous statements can offer valuable insights into the context of the current response.

Third, consider the background knowledge related to the subject matter. This can help determine if the response aligns with established facts and theories. Finally, look for inconsistencies or contradictions within the response itself or in relation to the overall conversation. These inconsistencies can signal potential errors or biases.

Role of Background Knowledge in Assessing Chatbot Information

Background knowledge plays a vital role in assessing chatbot information. When evaluating a chatbot’s response, having a solid understanding of the topic allows for a more thorough evaluation. This includes knowing relevant facts, concepts, and theories related to the subject. If the response contradicts established knowledge, this signals a potential error in the chatbot’s reasoning. Furthermore, familiarity with common biases and fallacies in reasoning can help identify manipulative or misleading responses.

Identifying Inconsistencies and Contradictions

Inconsistencies and contradictions within a chatbot’s response or between different responses can indicate errors in its reasoning or biases in its training data. Careful scrutiny of the chatbot’s statements for internal contradictions or conflicts with the conversation’s history is essential. For instance, a chatbot might state that a specific event occurred in one way in one response and in a completely different way in another, highlighting a possible inconsistency.

Similarly, a response that contradicts well-established facts or theories should raise immediate concerns about its accuracy.
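A simple way to test for this kind of inconsistency is to ask the same factual question several times, or in slightly different wordings, and compare the specific facts in each answer. The sketch below uses invented answers: it extracts four-digit years and reports whether they agree. The regular expression (years 1000 to 2099) and the sample text are illustrative assumptions.

```python
import re
from collections import Counter

# Matches four-digit years from 1000 to 2099 (an illustrative assumption)
YEAR = re.compile(r"\b(1[0-9]{3}|20[0-9]{2})\b")


def year_consistency(answers: list[str]) -> tuple[Counter, bool]:
    """Count the years mentioned across answers and report whether they all agree."""
    years = Counter()
    for answer in answers:
        years.update(set(YEAR.findall(answer)))  # count each year once per answer
    return years, len(years) <= 1


# Hypothetical answers to the same question asked three times
answers = [
    "The treaty was signed in 1648 after years of negotiation.",
    "It was signed in 1648.",
    "Negotiators finally signed the treaty in 1658.",
]
years, consistent = year_consistency(answers)
print(years, consistent)
```

Agreement across repeated answers does not prove correctness, because a model can repeat the same error. Disagreement, however, is a clear signal to check an independent source.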

Examples of Context Affecting Validity

Consider these examples illustrating how context significantly impacts the validity of a chatbot’s statement:

- A chatbot might state that “the Earth is flat” in response to a query about the shape of the Earth. However, if the conversation had previously established a playful, fictional scenario, the statement might be part of that narrative and not a factual claim.

- A chatbot might suggest a specific medical treatment in response to a user’s description of symptoms. The validity of this suggestion is heavily dependent on the user’s description being complete and accurate, and whether the chatbot was given proper context regarding potential allergies or other conditions.

- A chatbot might claim that “a specific stock will double in value within the next year.” The statement’s validity relies heavily on the context, including the economic climate, the company’s performance, and other relevant factors. Such predictions are inherently risky and should not be considered a guarantee.

Analyzing Data and Statistics

AI chatbots frequently present data and statistics to support their claims. Critically evaluating this information is crucial to ensure accuracy and avoid misinformation. This involves understanding how the data was collected, the potential biases inherent in the data, and the methods used to analyze it. The validity of the statistics presented by an AI chatbot is directly linked to the reliability of the underlying data and the appropriateness of the analytical methods employed.

Assessing Data Presented by AI Chatbots

Evaluating data presented by AI chatbots requires careful scrutiny. The first step is to determine the source of the data. Is it a reputable dataset, a published study, or a less reliable source? The source’s reputation and methodology directly affect the data’s trustworthiness. Also consider the data’s context within the overall conversation. Does the data presented align with the chatbot’s previous statements and the overall discussion?

Evaluating the Source of Presented Data

Determining the source of the data is paramount. Look for explicit citations or references within the chatbot’s response. If citations are lacking, the data’s reliability is significantly diminished. The source’s reputation is crucial. Has the dataset been peer-reviewed? Is the organization or individual behind the data known for accuracy and impartiality? For example, a report from a reputable government agency carries more weight than a blog post from an unknown individual.

Scrutinizing Statistical Claims Made by Chatbots

Statistical claims require meticulous scrutiny. AI chatbots may present percentages, averages, or other statistical measures. Look for explanations of how the statistics were calculated. Are the methods used appropriate for the data? Are there any potential biases in the data collection or analysis?

Consider the sample size used. A small sample can lead to unreliable conclusions, while a large, representative sample is generally more reliable. For instance, all else being equal, a survey of 1,000 people gives a far more precise estimate than a survey of 20.
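The effect of sample size can be quantified with the standard approximate margin of error for a proportion: z × √(p(1 − p) / n), with z ≈ 1.96 for 95% confidence. This assumes a simple random sample and is only a rough approximation at small n. It says nothing about biased sampling, which no sample size fixes. A quick calculation for the worst case, p = 0.5:

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (20, 1000):
    print(f"n={n}: ±{margin_of_error(n):.1%}")
```

This gives about ±21.9% for 20 respondents and about ±3.1% for 1,000. A reported "50%" from 20 people is therefore consistent with anything from roughly 28% to 72%. The same figure from 1,000 people narrows the range to roughly 47% to 53%.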

Potential Pitfalls and Errors in Data Presentation

Data in chatbot responses may be presented in misleading ways, whether because of flaws in the training data or deliberate manipulation. Look for potential biases in the selection of data points. Have specific data points been highlighted or omitted to support a particular narrative? Are the statistics presented in a way that oversimplifies complex issues? Be wary of cherry-picked data.

Ensure the presented data encompasses the full scope of the issue, not just the parts that support a specific conclusion. For instance, if a chatbot is discussing global poverty, look for data that encompasses all regions and not just those with low poverty rates.
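Cherry-picking is easy to demonstrate numerically. In the sketch below, which uses invented regional figures, averaging only a favorable subset of regions produces a much rosier number than averaging all of them. The region names and rates are hypothetical.

```python
# Hypothetical poverty rates (%) by region, for illustration only
rates = {"Region A": 4.0, "Region B": 6.0, "Region C": 28.0, "Region D": 42.0}


def mean(values):
    """Unweighted arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


full = mean(rates.values())
cherry_picked = mean(rates[r] for r in ("Region A", "Region B"))
print(f"all regions: {full:.1f}%  selected regions: {cherry_picked:.1f}%")
```

Averaging all four regions gives 20.0%, while the two selected regions give 5.0%. Even the full figure is an unweighted mean that ignores population sizes, which is another way a summary statistic can mislead.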

Steps for Evaluating the Validity of Data and Statistics

| Step | Action |
|---|---|
| 1 | Identify the source of the data. |
| 2 | Evaluate the reputation and methodology of the source. |
| 3 | Scrutinize the statistical methods used. |
| 4 | Check for potential biases in data selection and presentation. |
| 5 | Assess the sample size and representativeness of the data. |
| 6 | Compare the presented data to other reliable sources. |

Assessing Images and Multimedia

AI chatbots often incorporate images and other multimedia to support their responses. Assessing the authenticity and context of this multimedia is crucial for evaluating the chatbot’s information. Misinformation can be easily disseminated through manipulated images or videos, and recognizing these manipulations is a vital skill for discerning reliable information. Understanding how to evaluate the source, context, and potential manipulation of multimedia is essential for responsible information consumption.

Verifying Image Authenticity

Determining the authenticity of images presented by AI chatbots requires careful scrutiny. Images can be easily manipulated, and what appears to be a genuine photograph may have been altered. The methods used to verify the authenticity of an image often depend on the type of image.

Methods for Detecting Manipulation

A variety of methods can be used to assess the authenticity of images. These methods range from simple visual inspection to more advanced techniques.

- Visual Inspection: Careful observation of the image for inconsistencies can reveal potential manipulation. Look for pixelation, blurring, or unusual color gradients. Examine the image for signs of digital alteration, such as unnatural lighting, unnatural perspective, or inconsistencies in image elements.

- Reverse Image Search: Reverse image search engines find other places where an image has appeared online. Earlier copies can reveal the image’s original source and context. If an earlier version differs from the one presented, or the image originally accompanied a different event, it may have been altered or reused out of context.

- Metadata Analysis: Image metadata can offer clues about an image’s origin and authenticity. It includes information such as the date and time of creation, the camera model, and the software used. Examining it can help determine whether the image was created at a time that makes sense given the context of the chatbot’s response. Keep in mind that metadata is easily stripped or edited, so its absence proves nothing and its presence is not conclusive.

- Image Forensics Tools: Specialized tools are available to analyze images in more detail. These tools can identify signs of manipulation such as photo editing software used, watermarks, or inconsistencies in the image’s structure.
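Reverse image search services match images by visual similarity rather than by exact file contents. The sketch below is a rough illustration of that idea, not how any particular search engine works. It computes an "average hash" for small grayscale images stored as lists of pixel values, then compares hashes by Hamming distance. The test images are hypothetical.

```python
def average_hash(pixels: list[list[int]], size: int = 4) -> int:
    """Downsample a grayscale image (0-255 values) to size x size blocks and
    set one bit per block: 1 if the block is brighter than the mean of all blocks.
    Assumes the image is at least size x size pixels."""
    h, w = len(pixels), len(pixels[0])
    blocks = []
    for by in range(size):
        for bx in range(size):
            rows = range(by * h // size, (by + 1) * h // size)
            cols = range(bx * w // size, (bx + 1) * w // size)
            vals = [pixels[y][x] for y in rows for x in cols]
            blocks.append(sum(vals) / len(vals))
    mean = sum(blocks) / len(blocks)
    bits = 0
    for value in blocks:
        bits = (bits << 1) | (value > mean)  # True/False becomes bit 1/0
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


# Hypothetical 8x8 test images
original = [[x * 32 for x in range(8)] for y in range(8)]    # left-to-right gradient
brightened = [[v + 20 for v in row] for row in original]     # same picture, brightness edited
unrelated = [[y * 32 for x in range(8)] for y in range(8)]   # top-to-bottom gradient

h0 = average_hash(original)
print(hamming(h0, average_hash(brightened)))  # 0: treated as the same picture
print(hamming(h0, average_hash(unrelated)))   # 8 of 16 bits differ
```

Because the hash compares each block with the image’s own average brightness, a uniformly brightened copy still matches. This kind of robustness to simple edits is what allows similarity search to surface earlier versions of an image. Real systems use larger hashes, more robust features, and very large indexes.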

Evaluating Context and Source

The context of the image and the source from which it originates are equally important factors in determining its authenticity. An image appearing out of context or from a dubious source may raise immediate questions about its reliability.

- Source Credibility: Assess the reputation and trustworthiness of the website or platform presenting the image. Look for signs of bias or a history of spreading misinformation.

- Image Context: Examine the image’s relevance to the chatbot’s response. If the image appears out of place or irrelevant, it may be a sign of manipulation or an attempt to mislead the user.

- Look for Confirmation: Seek corroboration from other reliable sources. This helps to verify the information presented in conjunction with the image.

Examples of Manipulated Images

- Misleading Headlines: An image of a natural disaster might be used to illustrate a news story about an unrelated event, creating a misleading connection.

- Historical Misrepresentation: A photograph from a different era might be manipulated to create a false impression about a historical event.

- Deepfakes: Deepfake technology can create highly realistic but fabricated videos and images. These can be used to spread misinformation or impersonate individuals.

- Photoshopped Images: Simple photo editing tools can be used to alter or manipulate images to fit a particular narrative.

Methods for Verifying Image Authenticity (Table)

| Method | Description | Example |
|---|---|---|
| Visual Inspection | Identifying inconsistencies in lighting, colors, or objects. | A photo with unusually bright lighting or unrealistic color balance. |
| Reverse Image Search | Finding other instances of the image online. | Finding the image in multiple news articles about the same event. |
| Metadata Analysis | Examining the image’s creation date, camera model, and other details. | A photo presented as being from 1950 whose metadata shows a 2023 creation date. |
| Image Forensics Tools | Using specialized software to detect manipulation. | Tools to detect inconsistencies in pixel density or alterations in the image’s composition. |
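The date check in the metadata row can be partly automated. EXIF stores its DateTime tag (tag 306) as text in the form "YYYY:MM:DD HH:MM:SS". With the Pillow library, for example, it can typically be read via `Image.open(path).getexif().get(306)`. The sketch below works on that string directly, so it needs only the standard library. The function names, sample timestamp, and claimed year are hypothetical. A mismatch is a prompt to investigate, not proof: a genuine 1950 print scanned in 2023 will legitimately carry a 2023 timestamp.

```python
from datetime import datetime
from typing import Optional


def exif_year(exif_datetime: str) -> int:
    """Parse an EXIF DateTime string such as '2023:05:14 09:30:00' and return its year."""
    return datetime.strptime(exif_datetime, "%Y:%m:%d %H:%M:%S").year


def check_claimed_year(claimed_year: int, exif_datetime: Optional[str]) -> str:
    """Compare the year an image is claimed to come from with its recorded timestamp."""
    if not exif_datetime:
        return "no timestamp: metadata may have been stripped; rely on other checks"
    recorded = exif_year(exif_datetime)
    if recorded == claimed_year:
        return "consistent"
    return (f"mismatch: claimed {claimed_year}, metadata says {recorded}; "
            "check whether this is a later scan or an altered file")


print(check_claimed_year(1950, "2023:05:14 09:30:00"))
```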

Summary

In conclusion, this guide equips users with the skills needed to navigate the often complex world of AI-generated information. By understanding how AI constructs its responses, evaluating sources, recognizing biases, and analyzing data and multimedia, users can make informed decisions and avoid misleading content. This lets users benefit from AI chatbots while maintaining critical thinking and accuracy.