Harnessing the power of AI tools presents exciting possibilities, but ethical considerations are paramount. This comprehensive guide delves into the responsible implementation of AI, equipping users with the knowledge and strategies to leverage these powerful tools while mitigating potential risks.

From understanding the capabilities and limitations of various AI tools to identifying potential ethical pitfalls, this guide provides a structured approach to navigating the complex landscape of AI. It covers crucial aspects such as data privacy, bias detection, and the ongoing need for transparency and accountability.

Understanding AI Tools and Their Capabilities

Artificial intelligence (AI) tools are rapidly transforming various sectors, from creative industries to scientific research. Understanding these tools’ diverse capabilities and potential limitations is crucial for responsible use. This section delves into the different types of AI tools, their functionalities, and the potential biases they may exhibit.

AI tools are now ubiquitous, automating tasks and providing insights in ways previously unimaginable.

From generating images and summarizing text to crafting code, these tools are revolutionizing how we work and interact with information. Understanding their inner workings and potential pitfalls is key to leveraging their power ethically.

Types of AI Tools

Various AI tools exist, each designed for specific tasks. These tools vary greatly in their complexity and the data they require. This section explores some key types.

- Image Generation Tools: These tools can create realistic images from text descriptions. They are used for generating visual content, such as illustrations, graphic designs, and even photorealistic images. These tools can be valuable for artists, designers, and researchers. However, they can sometimes produce images that are not entirely accurate or that may reflect biases present in the training data.

- Text Summarization Tools: These tools condense large amounts of text into shorter summaries. They are useful for quickly understanding articles, reports, and other lengthy documents. However, these tools may not always capture the nuances of the original text or may omit important details, depending on the training data and the complexity of the input.

- Code Generation Tools: These tools assist in writing code for software applications. They can help developers automate tasks and improve code quality. However, the generated code may not always be optimal or efficient, and there’s a risk of introducing bugs or security vulnerabilities if not carefully reviewed by a human developer.

AI Tool Capabilities and Limitations

Different AI tools have varying strengths and weaknesses. Their potential benefits must be balanced against their limitations, particularly concerning accuracy, bias, and ethical considerations.

| Tool Type | Example | Use Case | Potential Bias and Limitations |
|---|---|---|---|
| Image Generation | DALL-E 2, Stable Diffusion | Creating images from text prompts, generating variations of existing images, artistic exploration | May reflect biases in training data, potentially perpetuating stereotypes in generated images. May favor specific styles or aesthetics. |
| Text Summarization | Summarizer, QuillBot | Condensing articles, reports, news stories, legal documents | May omit key details or misinterpret the context, potentially leading to inaccurate summaries. Can reflect biases in the source data. |
| Code Generation | GitHub Copilot, Tabnine | Automating code writing, generating code snippets, suggesting improvements | May introduce bugs or security vulnerabilities if not reviewed by a human developer. May reflect biases in the training data, potentially leading to suboptimal code solutions. |

How AI Tools Work (Simplified)

At their core, AI tools rely on machine-learning models trained on massive datasets. During training, a model learns patterns and relationships in the data, which enables it to perform tasks such as image generation, text summarization, and code creation. Think of it as teaching a computer to recognize patterns in examples and apply them to new inputs.

“AI tools learn from patterns in massive datasets, allowing them to perform tasks without explicit programming.”
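To make "learning patterns from data" concrete, here is a deliberately tiny sketch, assuming a one-variable linear model fitted by ordinary least squares. The function names `fit_line` and `predict` and the example data are hypothetical and chosen for illustration; real AI tools use vastly larger models, but the principle of estimating parameters from examples rather than hand-coding a rule is the same.

```python
# Minimal illustration of learning a pattern from examples: fit y = w * x + b
# by ordinary least squares, then apply the learned parameters to a new input.
# The training data below is synthetic and purely illustrative.


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) that minimize squared error over the examples."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be identical")
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Apply the learned pattern to an unseen input."""
    return slope * x + intercept


if __name__ == "__main__":
    # These examples follow y = 2x + 1, but that rule is never given to the code.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]
    w, b = fit_line(xs, ys)
    print(f"learned slope={w:.2f}, intercept={b:.2f}")
    print(f"prediction for x=10: {predict(w, b, 10.0):.2f}")
```

Running it recovers a slope of 2 and an intercept of 1 from the examples alone and predicts 21 for x = 10. The same mechanism explains why biased training data yields biased outputs: the model faithfully reproduces whatever pattern the examples contain.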

Identifying Potential Ethical Risks

Harnessing the power of AI tools presents exciting opportunities, yet it also necessitates careful consideration of potential ethical pitfalls. Understanding and proactively mitigating these risks is crucial for responsible AI development and deployment. This section delves into common ethical concerns, past misuses, and potential societal impacts to foster a more informed discussion surrounding AI ethics.

Common Ethical Concerns

The widespread adoption of AI tools brings forth a multitude of ethical considerations. These concerns often stem from the potential for AI systems to perpetuate existing biases, disseminate misinformation, and disrupt traditional employment models. Addressing these concerns head-on is essential for ensuring the responsible development and deployment of AI.

- Bias in AI Systems: AI models are trained on data, and if this data reflects societal biases, the AI system can perpetuate and even amplify these biases. For instance, facial recognition systems trained primarily on images of lighter-skinned individuals might perform less accurately on darker-skinned individuals, leading to discriminatory outcomes. This bias can manifest in various applications, from loan applications to criminal justice systems.

- Misinformation and Manipulation: AI tools can be employed to generate realistic but fabricated content, including text, images, and audio. This ability to create convincing fakes raises serious concerns about the spread of misinformation and manipulation. Deepfakes, for example, are synthetic media that can be used to fabricate false statements or create misleading impressions, posing significant risks to individuals and society.

- Job Displacement: Automation driven by AI could lead to job displacement across various sectors. While AI may create new jobs, the potential for significant job losses necessitates careful consideration of retraining and upskilling programs to mitigate the negative economic and social consequences.

Examples of Past AI Misuse

A critical step in anticipating future ethical challenges is examining past incidents where AI tools have been misused or have caused harm. These historical examples underscore the importance of proactive ethical considerations in AI development.

- Algorithmic Bias in Lending: Some lenders have used AI systems that exhibited bias against certain demographic groups, in some instances disproportionately denying loans to minority applicants. The resulting unequal access to credit highlights the need for careful scrutiny and fairness in AI-driven lending practices.

- Misinformation Campaigns: Sophisticated AI tools can be used to generate and disseminate fake news and propaganda, creating significant challenges for media literacy and democratic processes. These campaigns can undermine trust in established institutions and incite societal division.

Potential Societal Impacts of Widespread AI Adoption

Widespread adoption of AI tools could have profound societal impacts, touching on various aspects of daily life. Proactive measures are necessary to ensure a beneficial future for society.

- Economic Disparities: The uneven distribution of AI-driven economic benefits could exacerbate existing economic inequalities, widening the gap between the wealthy and the less privileged. Careful policies and initiatives are needed to ensure that AI-driven advancements benefit all members of society.

- Erosion of Privacy: The increasing reliance on AI for data collection and analysis raises concerns about privacy violations. Robust regulations and ethical guidelines are necessary to balance the benefits of AI with the protection of individual privacy.

Potential Ethical Dilemmas and Mitigating Strategies

The following table outlines potential ethical dilemmas that might arise from AI tool usage and suggests corresponding mitigating strategies.

| Ethical Dilemma | Mitigating Strategy |
|---|---|
| Bias in AI Systems | Develop diverse and representative datasets for training AI models, implement fairness-aware algorithms, and conduct regular audits for bias detection. |
| Misinformation and Manipulation | Develop and promote media literacy, establish fact-checking mechanisms, and invest in technologies to detect and combat deepfakes. |
| Job Displacement | Implement proactive retraining and upskilling programs, support the development of new jobs in AI-related fields, and explore alternative economic models to mitigate the impact of job displacement. |

Implementing Responsible Use Practices

Leveraging AI tools ethically requires careful consideration of their practical application. This involves understanding the potential impact on individuals and society, and proactively mitigating potential harms. We must prioritize data privacy, security, and responsible use practices throughout the entire lifecycle of AI development and deployment.

The responsible use of AI tools extends beyond simply building and deploying them. It necessitates a commitment to ethical considerations, transparency, and accountability at every stage, from data collection to output evaluation. This ensures that AI benefits society while minimizing potential risks.

Data Privacy and Security Considerations

Protecting user data is paramount when using AI tools. Strong encryption methods and access controls are essential to safeguard sensitive information. Data anonymization and pseudonymization techniques can further enhance privacy by ensuring that personal data is not directly linked to individuals. Data security policies should be clearly articulated and regularly reviewed to adapt to emerging threats. Robust procedures for responding to data breaches should be in place, and user consent protocols must be transparent and unambiguous.
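Pseudonymization can be illustrated with a minimal sketch, assuming keyed hashing (HMAC-SHA256) as the technique; this is one common approach, not the only one. The function names, the record fields (`email`, `age_band`, `clicks`), and the inline key generation are hypothetical. In practice the key would be held in a separate secrets store, away from the pseudonymized data.

```python
import hashlib
import hmac
import secrets

# Keyed pseudonymization: replace a direct identifier with an HMAC-SHA256
# digest. The same identifier and key always yield the same pseudonym, so
# records can still be linked, but the digest alone does not reveal the input.
# ASSUMPTION: the key is generated inline only for illustration; a real system
# would load it from a secrets manager and store it apart from the data.


def pseudonymize(identifier: str, key: bytes) -> str:
    """Return a stable, keyed pseudonym for a direct identifier."""
    normalized = identifier.strip().lower()  # treat " Ada@X.com" and "ada@x.com" alike
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, id_fields: tuple[str, ...], key: bytes) -> dict:
    """Copy a record, replacing each listed identifier field with its pseudonym."""
    out = dict(record)
    for field in id_fields:
        if out.get(field) is not None:
            out[field] = pseudonymize(str(out[field]), key)
    return out


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # hypothetical key, kept out of the dataset
    # Hypothetical user record; field names are illustrative only.
    user = {"email": "Ada@example.com", "age_band": "30-39", "clicks": 12}
    print(pseudonymize_record(user, ("email",), key))
```

Because anyone holding the key can re-identify people by hashing candidate values, pseudonymized data is weaker than true anonymization; under regimes such as the GDPR it is generally still treated as personal data.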

Guidelines for Responsible Data Collection and Usage

Data collection should adhere to strict ethical guidelines. Explicit consent must be obtained from individuals before collecting their data. Data collection practices should be transparent, clearly explaining how the data will be used. Bias in datasets must be meticulously identified and mitigated to prevent unfair or discriminatory outcomes. Data should be collected only for the specific purpose outlined to the user, and appropriate measures for data retention and deletion must be in place.

Data should be used only in alignment with the user’s consent and within legal frameworks.

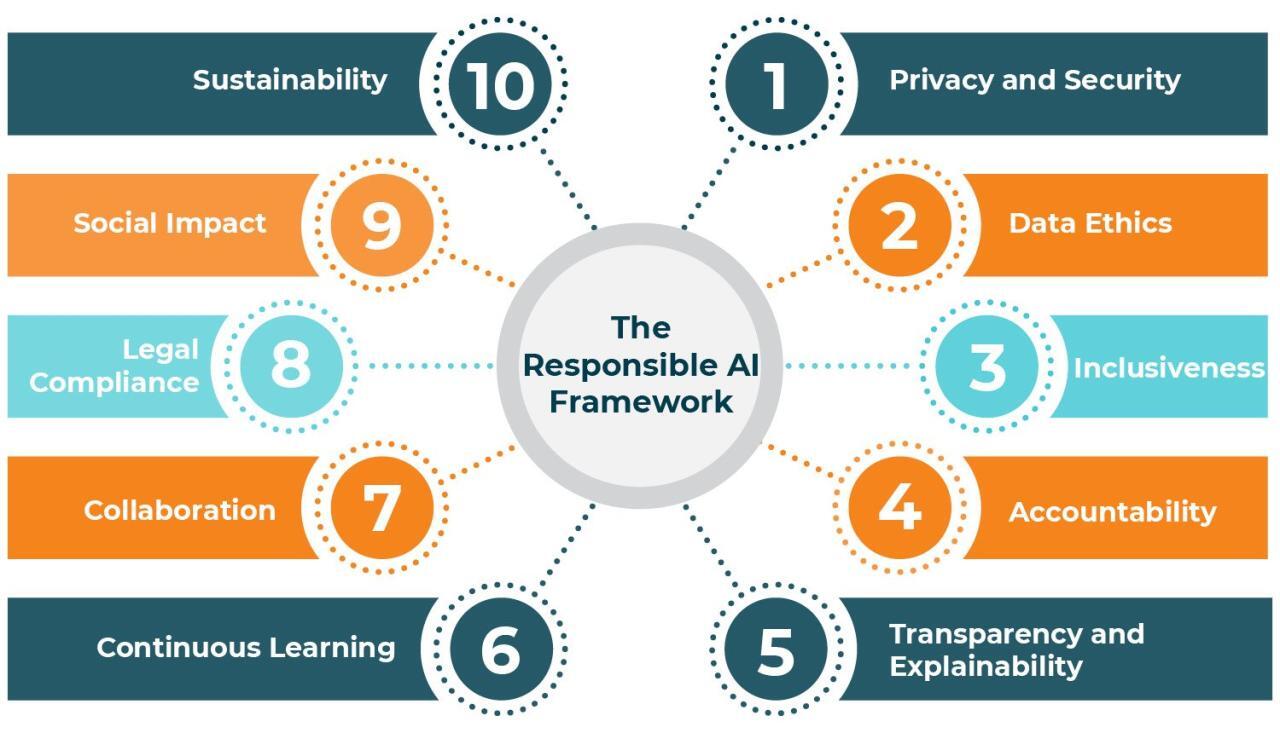

Principles for Ethical AI Tool Development and Deployment

Establishing a set of ethical principles is crucial for guiding AI tool development and deployment. These principles should include:

- Transparency and Explainability: AI systems should be designed with transparency in mind, allowing users to understand how the system arrives at its conclusions. Black box algorithms should be avoided where possible, and the rationale behind AI decisions should be demonstrably clear.

- Fairness and Non-Discrimination: AI systems must be designed to avoid bias and ensure equitable outcomes for all users. Algorithms should be regularly audited to identify and mitigate potential biases in the data or the algorithms themselves. Careful attention to the representation of diverse populations in training data is essential.

- Accountability and Responsibility: Clear lines of accountability must be established for the development, deployment, and use of AI tools. Individuals and organizations responsible for the design, implementation, and oversight of AI systems should be held accountable for their actions.

- Privacy and Security: Data privacy and security should be paramount throughout the lifecycle of AI systems. Robust security measures and data protection protocols should be implemented to safeguard user data and prevent unauthorized access.

Evaluating Accuracy and Reliability of AI Outputs

Evaluating the accuracy and reliability of AI outputs is critical for ensuring trustworthy AI systems. Testing should include diverse and representative datasets, and outputs should be critically examined for potential biases, errors, or inconsistencies.

- Validation Techniques: Rigorous testing and validation procedures are necessary to establish the accuracy and reliability of AI outputs. These procedures should include techniques like cross-validation, A/B testing, and comparisons with human experts.

- Monitoring and Feedback Mechanisms: Systems should include mechanisms to monitor AI performance in real-world scenarios and gather user feedback. This feedback should be used to identify and address issues, and continuously improve the accuracy and reliability of the system.

- Continuous Improvement: AI systems should be designed for continuous improvement, allowing for the incorporation of new data and feedback to refine their performance over time.
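Cross-validation, one of the validation techniques listed above, can be sketched in plain Python, assuming a k-fold scheme with a seeded shuffle followed by a round-robin split. The baseline model (`fit_mean`, which always predicts the training mean) and the scoring function (`mean_abs_error`) are hypothetical stand-ins; in a real project a library implementation such as scikit-learn's `KFold` would usually be preferred.

```python
import random
from statistics import mean
from typing import Callable, Sequence, TypeVar

Model = TypeVar("Model")

# k-fold cross-validation: split the data into k folds, train on k-1 folds,
# evaluate on the held-out fold, and repeat so every example is tested once.


def k_fold_indices(n: int, k: int, seed: int = 0) -> list[list[int]]:
    """Shuffle indices 0..n-1 and deal them round-robin into k near-equal folds."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and the number of examples")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # avoid folds that follow collection order
    return [indices[i::k] for i in range(k)]


def cross_validate(
    xs: Sequence[float],
    ys: Sequence[float],
    k: int,
    fit: Callable[[list[float], list[float]], Model],
    score: Callable[[Model, list[float], list[float]], float],
    seed: int = 0,
) -> list[float]:
    """Return one score per fold, each computed on data the model never saw."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train_x = [xs[i] for i in range(len(xs)) if i not in held_out]
        train_y = [ys[i] for i in range(len(ys)) if i not in held_out]
        model = fit(train_x, train_y)
        test_x = [xs[i] for i in test_idx]
        test_y = [ys[i] for i in test_idx]
        scores.append(score(model, test_x, test_y))
    return scores


# Hypothetical baseline model: always predict the mean of the training labels.
def fit_mean(train_x: list[float], train_y: list[float]) -> float:
    return mean(train_y)


def mean_abs_error(model: float, test_x: list[float], test_y: list[float]) -> float:
    return mean(abs(y - model) for y in test_y)


if __name__ == "__main__":
    xs = [float(i) for i in range(10)]
    ys = [2 * x + 1 for x in xs]  # synthetic labels for illustration
    fold_scores = cross_validate(xs, ys, k=5, fit=fit_mean, score=mean_abs_error)
    print("per-fold MAE:", [round(s, 2) for s in fold_scores])
    print("mean MAE:", round(mean(fold_scores), 2))
```

Large differences between per-fold scores are themselves informative: they suggest the model's reliability depends on which slice of the data it sees, which is worth investigating before deployment.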

Promoting Transparency and Accountability

Promoting transparency and accountability is crucial for building trust in AI systems and ensuring their responsible use. Openness about how AI tools operate, coupled with mechanisms for holding developers and users accountable, fosters a culture of ethical development and application. This approach is vital to mitigating potential harms and maximizing the benefits of AI technologies.

Understanding how AI tools function, including their limitations and potential biases, is essential for evaluating their impact and implementing appropriate safeguards. A transparent approach allows stakeholders to understand the decision-making processes behind AI systems and enables meaningful scrutiny. This understanding is essential to identify and mitigate potential biases, fostering fairness and equitable outcomes.

Importance of Transparency in AI Tool Operations

Transparency in AI tool operations is paramount. Users should have clear insight into the functioning of AI systems, enabling them to understand how decisions are made. This involves providing clear explanations of the algorithms used, the data employed, and the potential limitations of the technology. A lack of transparency can lead to a loss of trust and prevent users from effectively assessing the outputs of the AI tool, making it difficult to identify potential biases or errors.

Understanding how an AI tool works allows for more informed decision-making and helps in identifying potential risks.

Identifying and Mitigating Bias in AI Algorithms

Bias in AI algorithms can lead to unfair or discriminatory outcomes. Identifying and mitigating these biases is a critical step in ensuring responsible AI development and use. This involves careful analysis of the data used to train the algorithms and the algorithms themselves. The algorithms should be designed to avoid perpetuating existing societal biases, and the data should be carefully curated to minimize bias.

Techniques for bias detection and mitigation, such as fairness-aware machine learning methods and diverse datasets, can help. For instance, an AI system used for loan applications could inadvertently discriminate against certain demographic groups if the training data reflects historical biases. Addressing this bias through diverse and representative data can lead to fairer and more equitable outcomes.
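A basic bias audit for the loan example can be sketched as follows, assuming a binary decision (approved or denied) and a single group attribute per applicant. The sketch computes each group's approval rate, the demographic parity difference (highest rate minus lowest), and the disparate impact ratio (lowest rate divided by highest). The 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb in US employment-selection guidance; the function names and audit data are hypothetical.

```python
# Bias-audit sketch for a binary decision such as loan approval.
# ASSUMPTION: the 0.8 threshold follows the "four-fifths" rule of thumb; it is a
# screening heuristic that prompts investigation, not a proof of (un)fairness.


def approval_rates(groups: list[str], approved: list[bool]) -> dict[str, float]:
    """Fraction of positive decisions for each group."""
    if not groups or len(groups) != len(approved):
        raise ValueError("groups and approved must be non-empty and equal in length")
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    for group, ok in zip(groups, approved):
        totals[group] = totals.get(group, 0) + 1
        hits[group] = hits.get(group, 0) + int(ok)
    return {group: hits[group] / totals[group] for group in totals}


def fairness_report(groups: list[str], approved: list[bool], threshold: float = 0.8) -> dict:
    """Compare approval rates across groups and flag large disparities."""
    rates = approval_rates(groups, approved)
    highest, lowest = max(rates.values()), min(rates.values())
    # If no group receives any approvals, there is no disparity to measure.
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        "flagged": ratio < threshold,
    }


if __name__ == "__main__":
    # Hypothetical audit data: one group label and one decision per applicant.
    groups = ["A"] * 10 + ["B"] * 10
    approved = [True] * 8 + [False] * 2 + [True] * 5 + [False] * 5
    report = fairness_report(groups, approved)
    print(report["rates"], round(report["disparate_impact_ratio"], 3), report["flagged"])
```

With these made-up numbers, group A is approved 80% of the time and group B 50%, giving a ratio of 0.5 / 0.8 = 0.625, so the report is flagged. Passing such a check does not establish fairness on its own: other metrics (for example, comparing error rates across groups) can disagree with it, so audits typically examine several.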

Framework for Evaluating Potential Impact of AI Tools on Various Groups

A robust framework for evaluating the potential impact of AI tools on various groups is essential. This involves considering how different stakeholders, including individuals, communities, and organizations, might be affected by the deployment of AI tools. The framework should assess potential risks, such as discrimination, privacy violations, and job displacement. A structured evaluation approach helps to anticipate and mitigate potential negative impacts and promote equitable outcomes.

For example, an AI system designed to automate customer service interactions should be evaluated for potential impacts on customer experience, job displacement for human agents, and accessibility for individuals with disabilities.

Role of Regulations and Standards in Ensuring Ethical AI Use

Regulations and standards play a vital role in promoting ethical AI use. Clear guidelines and regulations can help to ensure that AI systems are developed and deployed responsibly. These regulations should address issues such as data privacy, algorithmic transparency, and accountability for AI-driven decisions. Examples include data protection laws, which help to safeguard individual privacy, and guidelines for algorithmic fairness, which help to mitigate bias.

These regulations provide a crucial framework for governing the use of AI tools and help to promote ethical and responsible development and deployment. Standards for data quality and algorithmic transparency can also enhance the trustworthiness of AI systems.

Fostering Dialogue and Education

Cultivating a culture of ethical AI necessitates open dialogue and accessible education. This involves not only informing individuals about the potential risks and benefits of AI but also empowering them to engage in informed discussions and contribute to responsible development and deployment. Through shared understanding and collaborative efforts, we can ensure that AI tools are used in a way that aligns with societal values and promotes human well-being.

Examples of Successful Ethical AI Initiatives

Numerous organizations and individuals are actively promoting ethical AI practices. These initiatives demonstrate the importance of proactively addressing ethical considerations throughout the entire AI lifecycle. By learning from successful examples, we can refine our strategies and implement effective solutions.

- The development of ethical guidelines by tech companies like Google and Microsoft for responsible AI development and deployment illustrates a commitment to incorporating ethical considerations into their AI strategies. These guidelines often address bias detection and mitigation, data privacy, and transparency.

- Academic institutions are leading initiatives that foster research and education in AI ethics, offering courses and workshops that equip students and professionals with the necessary knowledge and skills to develop and deploy AI responsibly. This proactive approach empowers future generations to tackle the ethical challenges associated with AI.

- Civil society organizations, such as the Partnership on AI, play a crucial role in advocating for ethical AI standards and policies. They engage in public discussions, conduct research, and provide resources to promote the responsible use of AI. These organizations help ensure that the public discourse on AI ethics is well-informed and inclusive.

Resources for Learning More About AI Ethics

Numerous resources are available to deepen understanding of AI ethics. These resources span various formats and target different audiences, providing a comprehensive learning experience.

- Academic journals and publications dedicated to AI ethics offer in-depth analyses of ethical challenges and best practices. These resources provide a foundation for understanding the complex issues surrounding AI development and deployment.

- Online courses and workshops provide practical training and knowledge-sharing opportunities. Platforms such as Coursera and edX offer courses on AI ethics, allowing individuals to gain valuable insights and develop expertise in this critical area.

- Books and articles dedicated to AI ethics explore different facets of this field, providing a diverse range of perspectives and fostering a comprehensive understanding of the challenges and opportunities associated with AI.

Key Stakeholders Involved in Promoting Ethical AI Use

A wide range of stakeholders are vital to ensuring the ethical development and implementation of AI. Their collaborative efforts are essential for creating a robust and responsible AI ecosystem.

- Technology companies play a crucial role in shaping ethical AI practices by incorporating ethical considerations into their development processes and adhering to established standards.

- Government agencies can establish regulations and policies to guide the development and deployment of AI, ensuring its alignment with societal values and safety concerns.

- Researchers contribute to the advancement of AI ethics by conducting research, developing new methodologies, and disseminating knowledge to promote ethical AI practices.

- Civil society organizations advocate for ethical AI principles and promote public awareness, fostering a wider understanding and engagement in discussions regarding AI ethics.

- Academic institutions educate future generations about AI ethics and provide opportunities for research and development, nurturing a workforce equipped to tackle ethical challenges.

- The public plays a crucial role in promoting ethical AI use by engaging in discussions, raising concerns, and demanding accountability from stakeholders.

Methods for Educating the Public About Responsible AI Use

Effective education strategies are crucial for promoting public understanding of AI ethics. These strategies aim to empower individuals with the knowledge and tools necessary to engage in responsible AI discussions and decisions.

- Public awareness campaigns through various media channels, such as social media, television, and radio, can effectively disseminate information about AI ethics. These campaigns should emphasize the importance of responsible AI use and its impact on society.

- Interactive workshops and presentations can provide hands-on experience and facilitate discussions about AI ethics. These events should encourage participation and promote a collaborative learning environment.

- Educational materials, such as brochures, websites, and online tutorials, can offer accessible information on AI ethics to a wider audience. These resources should be tailored to different levels of understanding and address specific concerns.

Case Studies of Ethical AI Use

Examining successful implementations of AI tools demonstrates how responsible development and deployment can mitigate potential risks. These case studies highlight the positive impact of AI when implemented with ethical considerations at its core. By understanding the methodologies used in these examples, we can learn valuable lessons to apply in our own AI projects.

Examples of Responsible AI Applications

Understanding successful AI implementations provides valuable insights into ethical considerations. These examples illustrate how careful planning, transparent processes, and community engagement can lead to beneficial outcomes. The selection of case studies below represents a variety of industries and application types.

| Case Study | Ethical Considerations | Approach Taken and Comparison with Other Implementations |
|---|---|---|
| AI-powered medical diagnosis tool for diabetic retinopathy detection | Accuracy, bias in data, patient privacy, accessibility of the tool, and potential over-reliance on the tool. | This case study emphasizes the importance of rigorous data quality control, comprehensive testing, and patient privacy protocols. Other AI implementations may prioritize accessibility, user training, or a more collaborative approach with healthcare professionals. |
| AI-driven personalized education platform for students with learning disabilities | Fairness, inclusivity, and individual learning needs. The platform’s potential for perpetuating existing inequalities in educational resources and access. | This approach focuses on tailored learning and continuous improvement. Other applications might prioritize standardized assessments or more general learning outcomes, requiring different ethical considerations. |
| AI-assisted fraud detection system in financial institutions | Fairness in risk assessment, bias in data, and potential for over-reliance on the tool, leading to human error. | The approach prioritizes human oversight and transparent decision-making. Other systems might focus on automation and speed, potentially sacrificing some level of oversight or transparency. |

Future Trends and Considerations

The rapid advancement of AI tools necessitates a proactive and adaptable approach to ethical considerations. Predicting future developments and potential implications is crucial to proactively address emerging challenges and capitalize on opportunities. This section explores anticipated trends in AI technology, potential future regulations, emerging challenges and opportunities, and the ongoing need for dialogue and adaptation.

Future Developments in AI Tool Technology

The trajectory of AI development suggests a continued increase in sophistication and accessibility. Expect more sophisticated natural language processing, enabling AI tools to understand and respond to human language with greater nuance and context. Improvements in computer vision will allow for more accurate and detailed image and video analysis, opening doors to applications in medical diagnostics, autonomous vehicles, and environmental monitoring.

Furthermore, the integration of AI into existing systems will continue, creating more interconnected and intelligent environments. This integration will likely lead to increased automation in various sectors, raising important questions about workforce displacement and the need for retraining and upskilling programs.

Potential Future Regulations or Standards Related to AI Ethics

The development of comprehensive and internationally recognized guidelines for AI ethics is becoming increasingly critical. Expect to see a greater emphasis on transparency and explainability in AI decision-making processes. This could involve standards for data privacy, algorithmic bias detection, and mechanisms for redress when AI systems cause harm. Moreover, future regulations may address the accountability of developers, deployers, and users of AI systems, aiming to establish clear lines of responsibility for the actions and outcomes of AI-driven systems.

The establishment of independent oversight bodies could be a crucial step in ensuring ethical and responsible AI development and deployment. Examples of existing and emerging standards in specific sectors, such as healthcare and finance, can provide valuable insights and inform the development of future regulations.

Potential Emerging Challenges and Opportunities in the Field

The widespread adoption of AI presents both significant opportunities and challenges. Opportunities include advancements in scientific discovery, personalized medicine, and enhanced productivity in various industries. However, challenges such as job displacement, algorithmic bias, and the potential for misuse of AI tools demand careful consideration. The creation of robust ethical frameworks and educational initiatives is crucial for mitigating potential risks and maximizing benefits.

Emerging challenges include the potential for AI systems to be used for malicious purposes, such as deepfakes and targeted misinformation campaigns. Addressing these concerns will require collaboration between policymakers, researchers, and the public.

The Need for Ongoing Dialogue and Adaptation in the Face of Rapid AI Development

The pace of AI development necessitates a continuous and iterative approach to ethical considerations. Dialogue and collaboration between stakeholders, including researchers, policymakers, industry leaders, and the public, are essential to address the evolving challenges and opportunities. Ongoing adaptation is crucial to ensure that ethical guidelines and regulations remain relevant and effective in the face of rapid technological advancements.

Regular reviews and updates to ethical guidelines are critical to address unforeseen consequences and emerging ethical concerns. The field requires a commitment to ongoing learning, adaptation, and proactive problem-solving to ensure that AI is developed and deployed in a manner that benefits humanity as a whole.

Final Thoughts

In conclusion, responsible AI use requires a multifaceted approach encompassing technical proficiency, ethical awareness, and a commitment to ongoing dialogue. By understanding the potential benefits and risks, implementing best practices, and fostering a culture of ethical consideration, we can harness the transformative power of AI while ensuring it is applied responsibly for the benefit of all.