Natural Language Processing (NLP) is rapidly transforming how we interact with technology. This field, at the intersection of computer science and linguistics, empowers computers to understand, interpret, and generate human language. From simple chatbots to complex language translation systems, NLP is becoming increasingly ubiquitous. This guide delves into the fundamental concepts, core tasks, and key techniques of NLP, providing a clear and concise overview for beginners.

This comprehensive exploration will cover the essential components of NLP, including the core NLP tasks like text classification and sentiment analysis. We will also discuss the critical role of language models, syntax, and semantics in understanding human language. Different NLP techniques, such as tokenization and stemming, will be explained, along with their applications in various sectors. Finally, we will examine the challenges and future trends in NLP, highlighting the potential and limitations of this exciting field.

Introduction to NLP

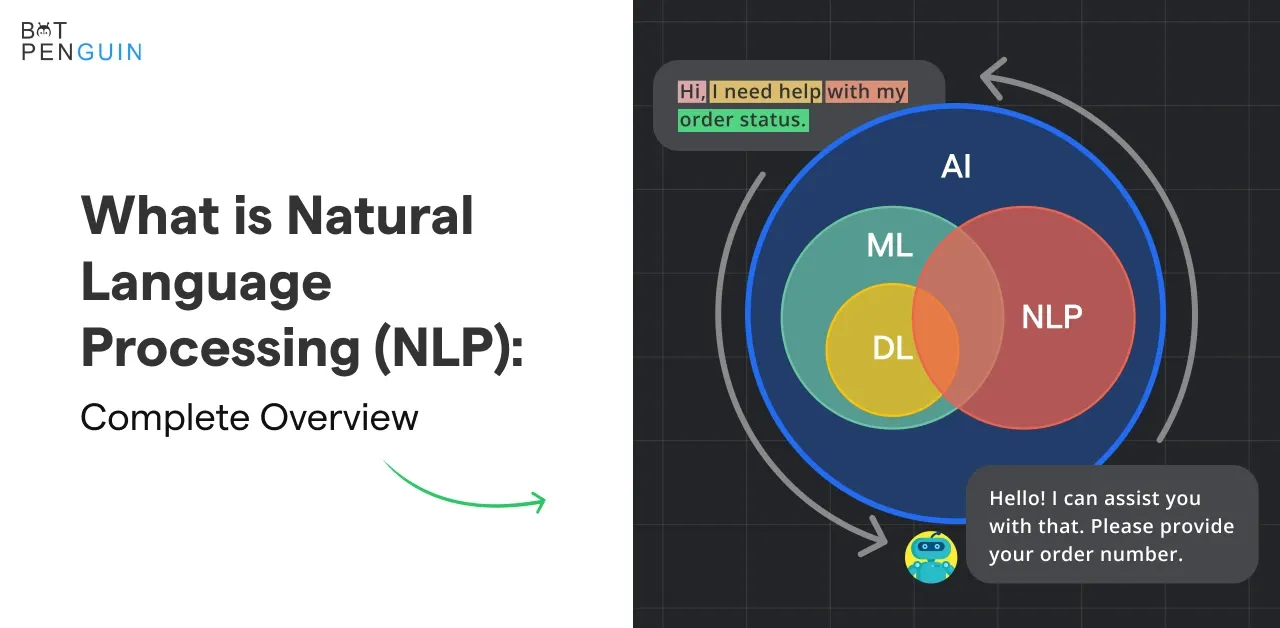

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) focused on enabling computers to understand, interpret, and generate human language. This involves developing algorithms and models that allow computers to process and analyze text and speech data, much like humans do. It’s a rapidly evolving field with significant implications across various industries. The fundamental concepts behind NLP center on the idea of bridging the gap between human communication and computer understanding.

Computers, unlike humans, do not inherently “understand” language in the same way we do. NLP aims to provide a structured method for computers to process and analyze language, extracting meaning and context from text or speech. This involves tasks such as tokenization (breaking down text into individual words), part-of-speech tagging (identifying the grammatical role of words), and sentiment analysis (determining the emotional tone of text).

Definition of NLP

Natural Language Processing (NLP) is a field of computer science focused on enabling computers to process and understand human language. This encompasses various tasks, from basic text analysis to complex language generation.

Fundamental Concepts

NLP relies on several key concepts to achieve its goals. Crucially, computers need a structured way to represent and process language data. This often involves techniques like statistical modeling, machine learning, and deep learning. These approaches allow computers to learn patterns and relationships within language data, enabling them to perform tasks like translation, summarization, and question answering.

Significance of NLP in Today’s World

NLP has become increasingly important in today’s world due to the vast amount of textual and spoken data available. Applications range from simple tasks like spam filtering to complex tasks like medical diagnosis and customer service chatbots. Its significance stems from its ability to automate tasks, extract insights from data, and ultimately, enhance human-computer interaction.

Analogy

Imagine a human translator. They take a sentence in one language, understand its meaning, and translate it into another language. NLP systems function similarly. They take input text (or speech), understand the meaning and context, and then produce output in a desired format (e.g., translated text, summary, answer to a question).

Key Components of NLP

Understanding the core components of NLP is crucial to appreciating its multifaceted nature. These components work together to achieve the desired outcomes.

| Component | Description | Example |
|---|---|---|
| Lexical Analysis | This involves breaking down text into smaller units (like words or phrases) and identifying their grammatical properties. | Identifying nouns, verbs, adjectives, and other parts of speech in a sentence. |
| Syntactic Analysis | This component analyzes the grammatical structure of sentences to determine the relationships between words. | Identifying the subject, verb, and object of a sentence. |
| Semantic Analysis | This focuses on understanding the meaning of words and phrases within the context of a sentence or larger text. | Determining the intended meaning of a sentence, even with ambiguous wording. |
| Discourse Analysis | This component analyzes the relationships between sentences and paragraphs, considering the overall context and flow of the text. | Understanding the connections between different paragraphs in a news article. |
| Pragmatic Analysis | This component interprets the intended meaning and purpose behind a text or utterance, considering the context and speaker’s intent. | Understanding the implied meaning of a sarcastic comment. |

Core NLP Tasks

Natural Language Processing (NLP) encompasses a diverse range of tasks, each aiming to bridge the gap between human language and computer understanding. These tasks range from simple text analysis to complex interactions, enabling machines to perform sophisticated operations on human language. Understanding these tasks is fundamental to grasping the potential and limitations of NLP applications.

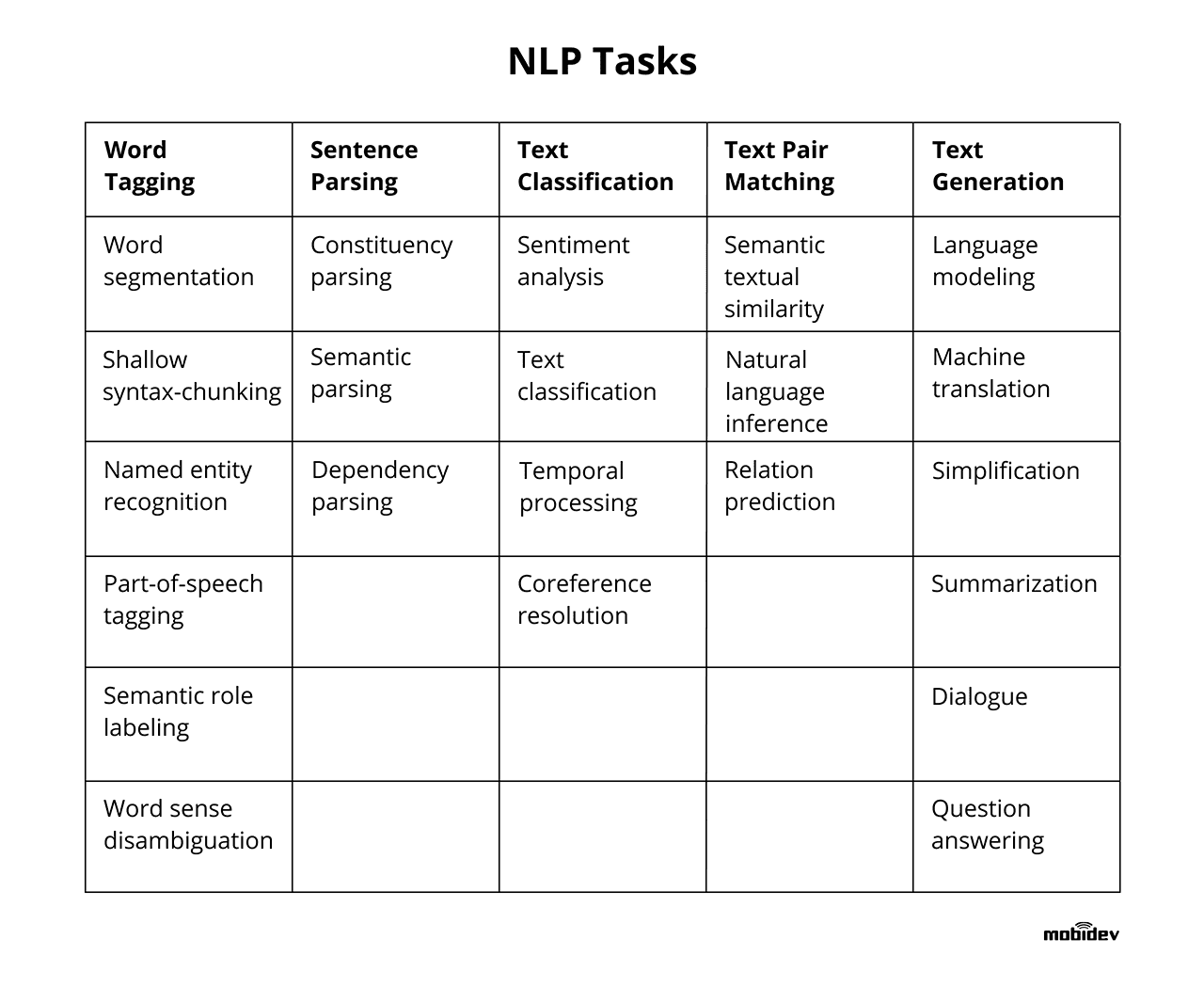

Types of NLP Tasks

NLP tasks are broadly categorized based on the nature of the operation performed on the text. These tasks often rely on various techniques, including machine learning algorithms and statistical models, to achieve their objectives. The specific techniques employed can significantly impact the accuracy and efficiency of the task.

- Text Classification: This task involves categorizing text into predefined classes or categories. Examples include classifying emails as spam or not spam, categorizing news articles into different topics, or identifying the sentiment expressed in a piece of text. Accurate text classification relies on the selection of appropriate features and training data.

- Sentiment Analysis: This task focuses on determining the emotional tone or opinion expressed in a piece of text. It is crucial for understanding public opinion, monitoring brand reputation, and identifying customer satisfaction levels. Sentiment analysis often involves analyzing words and phrases associated with positive, negative, or neutral sentiments.

- Machine Translation: This task involves automatically translating text from one language to another. This has become increasingly important in facilitating communication across different languages and cultures. Machine translation systems often use statistical models or neural networks to learn the relationships between words and phrases in different languages.

- Named Entity Recognition (NER): This task focuses on identifying and classifying named entities in text, such as people, organizations, locations, and dates. This information can be valuable in various applications, such as information retrieval, knowledge extraction, and question answering systems. Common techniques involve pattern matching and machine learning models trained on labeled data.

- Question Answering (QA): This task involves developing systems that can answer questions posed in natural language. These systems typically use NLP techniques to understand the question, retrieve relevant information from a knowledge base, and formulate an appropriate answer. Accuracy relies on both understanding the question and finding the right supporting information.
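As a concrete illustration of text classification, the sketch below trains a multinomial Naive Bayes classifier, one common machine learning approach, on a tiny spam/ham dataset. It is a minimal sketch assuming whitespace tokenization and add-one smoothing; the training sentences and the function names `train_naive_bayes` and `classify` are hypothetical, and a real system would need far more data and better features.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    # Deliberately naive: lowercase and split on whitespace.
    return text.lower().split()


def train_naive_bayes(docs, labels):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = tokenize(doc)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab


def classify(text, model):
    """Return the class with the highest log posterior under multinomial Naive Bayes."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)  # log prior P(class)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            if token not in vocab:
                continue  # ignore words never seen in training
            # Add-one (Laplace) smoothing keeps an unseen word/class pair from zeroing the score.
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy training data.
docs = ["win a free prize now", "claim your free money",
        "meeting moved to monday", "lunch with the team tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)

print(classify("free prize inside", model))      # spam
print(classify("team meeting tomorrow", model))  # ham
```

Summing log probabilities instead of multiplying raw probabilities avoids numerical underflow when a document contains many words.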

Comparing NLP Tasks

Different NLP tasks have varying objectives and methodologies. Text classification focuses on assigning categories, sentiment analysis on identifying emotions, and machine translation on converting languages. These tasks differ in complexity and the types of data required for training and evaluation.

- Text Classification: Aims to categorize text into predefined groups. Methodologies include keyword analysis, topic modeling, and machine learning algorithms.

- Sentiment Analysis: Aims to determine the sentiment (positive, negative, or neutral) expressed in text. Methodologies include lexicon-based approaches, machine learning models, and deep learning techniques.

- Machine Translation: Aims to translate text from one language to another. Methodologies include statistical machine translation (SMT) and neural machine translation (NMT).
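A lexicon-based sentiment approach can be sketched in a few lines: look up each word in a table of sentiment scores and add them up. The scores in `LEXICON` and the one-word negation rule are illustrative assumptions, not a published lexicon; production lexicons are much larger and also handle intensifiers, punctuation, and longer-range negation.

```python
import re

# Toy lexicon with hypothetical scores; real lexicons contain thousands of scored entries.
LEXICON = {"good": 1, "great": 2, "love": 2, "excellent": 2,
           "bad": -1, "poor": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    """Sum lexicon scores, flipping the sign of a word directly preceded by a negator."""
    score = 0
    negate = False
    for token in re.findall(r"[a-z']+", text.lower()):
        if token in NEGATORS:
            negate = True
            continue
        if token in LEXICON:
            score += -LEXICON[token] if negate else LEXICON[token]
        negate = False  # negation only affects the next word
    if score > 0:
        return "positive", score
    if score < 0:
        return "negative", score
    return "neutral", score


print(sentiment("I love this phone, the camera is great!"))  # ('positive', 4)
print(sentiment("The battery is not good."))                 # ('negative', -1)
print(sentiment("It arrived on Tuesday."))                   # ('neutral', 0)
```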

Steps in a Typical NLP Task

A typical NLP task often involves several key steps:

- Data Collection: Gathering the necessary text data for training and testing the NLP model.

- Data Preprocessing: Cleaning and preparing the data for model training, including tasks like removing irrelevant characters, handling missing values, and converting text to a suitable format.

- Feature Engineering: Identifying and extracting relevant features from the preprocessed data to represent the text for the model.

- Model Training: Using the extracted features to train a suitable machine learning or deep learning model on the prepared data.

- Model Evaluation: Assessing the performance of the trained model using metrics relevant to the specific task (e.g., accuracy, precision, recall for classification).

- Deployment: Deploying the trained model to a real-world application to perform the desired task on new, unseen text data.
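For the evaluation step, the usual classification metrics can be computed directly from gold and predicted labels. This sketch assumes a binary task with a designated positive class (here the hypothetical label `"spam"`); the label lists are made up for illustration.

```python
def evaluate(gold, predicted, positive="spam"):
    """Accuracy, precision, recall and F1 for a binary task with one positive class."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)  # true positives
    fp = sum(1 for g, p in pairs if g != positive and p == positive)  # false positives
    fn = sum(1 for g, p in pairs if g == positive and p != positive)  # false negatives
    accuracy = sum(1 for g, p in pairs if g == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


gold = ["spam", "spam", "ham", "ham", "spam"]
predicted = ["spam", "ham", "ham", "spam", "spam"]
metrics = evaluate(gold, predicted)
# accuracy 0.6; precision, recall and F1 are each about 0.667
print(metrics)
```

Precision answers “of the messages flagged as spam, how many were spam?”, while recall answers “of the actual spam, how much was caught?”; which one matters more depends on the application.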

Common NLP Tasks and Their Applications

| Task | Description | Example Application |
|---|---|---|
| Text Classification | Categorizing text into predefined classes. | Spam detection, news topic classification, document categorization. |
| Sentiment Analysis | Determining the sentiment expressed in text. | Customer feedback analysis, social media monitoring, brand reputation management. |
| Machine Translation | Translating text from one language to another. | Global communication, language learning, cross-cultural understanding. |
| Named Entity Recognition (NER) | Identifying and classifying named entities in text. | Information retrieval, knowledge extraction, question answering. |
| Question Answering (QA) | Answering questions posed in natural language. | Customer support chatbots, knowledge base systems, information retrieval systems. |

Key Concepts in NLP

Natural Language Processing (NLP) relies on a foundation of key concepts to understand and process human language. These concepts, including language modeling, syntax, semantics, and the challenges of ambiguity, are crucial for building sophisticated NLP systems. Language understanding in NLP is multifaceted: it requires modeling the probabilistic nature of language, analyzing grammatical structures, and interpreting meaning. This section explores these building blocks, their practical applications, and how they work together to bridge the gap between human communication and machine comprehension.

Language Modeling

Language modeling is a fundamental aspect of NLP, enabling machines to estimate the probability of a sequence of words occurring in a given language. It is crucial for tasks such as text generation, machine translation, and speech recognition. A better language model helps a system produce more fluent and grammatical text, choose more natural translations, and pick the most plausible transcription among acoustically similar candidates.

Types of Language Models

Various types of language models exist, each with its own strengths and weaknesses. Understanding these distinctions is vital for selecting the appropriate model for a specific NLP task.

- N-gram models: These models predict the probability of a word based on the preceding n-1 words. They are relatively simple to implement but may struggle with capturing long-range dependencies in language.

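- Recurrent Neural Networks (RNNs): RNNs process a sequence one word at a time while carrying a hidden state, which lets them capture dependencies across longer spans than fixed-window n-gram models. Plain RNNs still struggle with very long-range dependencies because of the vanishing gradient problem; gated variants such as LSTMs and GRUs were designed to mitigate this.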

- Transformers: Transformers, particularly architectures like BERT and GPT, have revolutionized NLP. Their self-attention mechanism lets each word attend directly to every other word in the input, so they capture complex relationships and contextual nuances more effectively than earlier architectures.
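To make the n-gram idea concrete, here is a minimal bigram model (n = 2), which estimates the probability of each word from the single preceding word by counting. It uses unsmoothed maximum-likelihood estimates, hypothetical `<s>`/`</s>` sentence-boundary markers, and a three-sentence toy corpus; the zero probability it assigns to an unseen bigram shows why practical n-gram models add smoothing.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts


def bigram_prob(counts, prev, word):
    # Maximum-likelihood estimate: P(word | prev) = count(prev, word) / count(prev, *)
    followers = counts.get(prev)
    if not followers:
        return 0.0
    return followers[word] / sum(followers.values())


def sentence_prob(counts, sentence):
    """Probability of a whole sentence as the product of its bigram probabilities."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(counts, prev, word)
    return prob


def predict_next(counts, prev):
    """Most frequent word observed after `prev`, or None if `prev` was never seen."""
    followers = counts.get(prev)
    return followers.most_common(1)[0][0] if followers else None


counts = train_bigram_model(["the cat sat", "the cat ran", "the dog sat"])
print(predict_next(counts, "the"))               # cat
print(sentence_prob(counts, "the cat sat"))      # 1 * 2/3 * 1/2 * 1, about 0.333
print(sentence_prob(counts, "the dog ran"))      # 0.0, since "dog ran" never occurs
```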

Examples and Applications

Language models are employed in a wide array of applications. For example, Google Translate leverages language models to translate text between different languages. Chatbots, such as those found on customer service websites, often use language models to understand user queries and respond appropriately. In the realm of creative writing, language models can generate various text forms, from poems to articles.

Syntax and Semantics

Syntax refers to the grammatical structure of a language, while semantics focuses on the meaning derived from the words and their relationships. Both are critical components of NLP, enabling machines to understand not only the arrangement of words but also the intended meaning. Understanding syntax allows NLP systems to parse sentences correctly, while semantics helps them interpret the true meaning behind the words.

Natural Language Structure and Interpretation

Human language arises from a complex interplay of syntax and semantics, and understanding this structure is vital for effective NLP. Word order, grammatical structure, and context all contribute to the overall meaning of a sentence: “dog bites man” and “man bites dog” contain the same words but describe different events. Interpretation often involves identifying the entities and relationships within the sentence, enabling machines to extract useful information.

Handling Ambiguity and Context

Ambiguity in language poses a significant challenge for NLP systems. A single word or phrase can have multiple interpretations, depending on the context. Effective NLP systems must be able to resolve this ambiguity and understand the context to arrive at the intended meaning. For example, “bank” can refer to a financial institution or the side of a river; understanding the surrounding words helps disambiguate the correct meaning.
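One classic way to use surrounding words for disambiguation is the Lesk approach: pick the sense whose dictionary definition (gloss) shares the most words with the context. The sketch below is a simplified variant under stated assumptions: the two-sense inventory in `SENSES`, its glosses, and the small stopword list are hypothetical stand-ins for a real lexical resource such as WordNet.

```python
import re

# Hypothetical mini sense inventory: each sense of "bank" is described by a short gloss.
SENSES = {
    "bank": {
        "financial_institution": "an institution that accepts deposits of money and makes loans to customers",
        "river_bank": "the sloping land beside a river or stream where water flows",
    }
}
STOPWORDS = {"a", "an", "the", "of", "to", "and", "or", "that", "where",
             "on", "in", "at", "i", "we", "my"}


def content_words(text):
    """Lowercased words with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def disambiguate(word, sentence):
    """Return the sense whose gloss overlaps most with the sentence context."""
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(disambiguate("bank", "I deposited money at the bank"))    # financial_institution
print(disambiguate("bank", "We sat on the bank of the river"))  # river_bank
```

Exact word overlap is brittle (“deposited” does not match “deposits” here), which is one reason stemming or lemmatization is often applied first.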

Language Model Comparison

| Model Type | Strengths | Weaknesses |
|---|---|---|
| N-gram | Simple, computationally efficient | Limited ability to capture long-range dependencies; struggles with rare words because unseen word sequences get zero probability unless smoothed |
| RNN | Captures longer-range dependencies than n-grams | Can be computationally expensive to train; vanishing gradient problem |
| Transformer | Excellent at capturing context, handling long sequences | Can be computationally expensive (attention cost grows quadratically with sequence length); requires significant training data |

NLP Techniques and Methods

Natural Language Processing (NLP) relies on a diverse range of techniques to effectively process and understand human language. These methods, ranging from simple to complex, are crucial for enabling computers to interpret, analyze, and generate human-like text. Understanding these techniques is essential for building robust and accurate NLP applications.

Tokenization

Tokenization is a fundamental NLP technique that breaks down text into individual units, called tokens. These tokens can be words, phrases, or even characters, depending on the specific application and the desired level of granularity. For example, the sentence “The quick brown fox jumps over the lazy dog” might be tokenized into a list of individual words. This process is critical for subsequent analysis because it prepares the text for further processing steps.
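A minimal word-level tokenizer can be written with a single regular expression. This sketch assumes that a token is either a run of word characters or a single punctuation mark; real tokenizers, including the subword tokenizers used by Transformer models, apply far more elaborate rules.

```python
import re


def tokenize(text):
    # \w+ matches runs of letters, digits or underscores; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


print(tokenize("The quick brown fox jumps over the lazy dog."))
# ['The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog', '.']
print(tokenize("Don't stop"))
# ['Don', "'", 't', 'stop']
```

Compared with plain `str.split()`, which would keep `"dog."` as one token, the regex separates punctuation. The contraction example shows a limitation: deciding how to split “Don’t” is a design choice that dedicated tokenizers handle explicitly.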

Stemming

Stemming aims to reduce words to a root form, or stem, by stripping affixes. This reduces vocabulary size and groups morphologically related words together. Algorithms like the Porter Stemmer apply a sequence of rules to remove suffixes: for example, “running” and “runs” are both reduced to the stem “run.” Because the rules only look at word endings, an irregular form such as “ran” is left unchanged; mapping it to “run” requires lemmatization. This simplification is helpful for tasks such as information retrieval, where variants of the same word should usually match the same documents.
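The rule-based idea can be illustrated with a deliberately tiny suffix stripper. This is not the Porter algorithm, which has many more rules and conditions; `simple_stem` and its rule set are hypothetical and only meant to show how stems arise, including a nonsensical one.

```python
def simple_stem(word):
    """Strip one common English suffix; a toy rule set, NOT the Porter algorithm."""
    word = word.lower()
    for suffix in ("ing", "ed", "es", "s"):
        # Only strip if at least three characters remain, so short words survive.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling ("runn" -> "run"), but keep "ll" and "ss" ("fall", "pass").
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word


print([simple_stem(w) for w in ["running", "runs", "ran", "studies"]])
# ['run', 'run', 'ran', 'studi']
```

The output shows both weaknesses discussed in this section: the irregular “ran” is untouched, and “studies” becomes the non-word “studi.”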

Lemmatization

Lemmatization is similar to stemming but goes a step further by mapping each word to its dictionary form. Instead of just removing suffixes, lemmatization attempts to find the base form (lemma) of a word, typically using its part of speech, so the result is always a valid word. For example, “running” would be lemmatized to “run,” and “better,” when tagged as an adjective, would be lemmatized to “good.” This more sophisticated approach is often preferred over stemming for applications that require greater accuracy in handling grammatical variations.
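The role of the part of speech can be shown with a lookup-table lemmatizer. The `LEMMA_TABLE` entries and the `lemmatize` function are hypothetical; real lemmatizers, such as the WordNet-based one in NLTK, combine large exception lists with morphological rules rather than relying on a hand-written table.

```python
# Hypothetical mini lexicon mapping (word, part of speech) to its lemma.
LEMMA_TABLE = {
    ("running", "verb"): "run",
    ("runs", "verb"): "run",
    ("ran", "verb"): "run",
    ("better", "adj"): "good",
    ("best", "adj"): "good",
    ("mice", "noun"): "mouse",
}


def lemmatize(word, pos):
    """Look up the lemma for a word and its part of speech; fall back to the word itself."""
    return LEMMA_TABLE.get((word.lower(), pos), word.lower())


print(lemmatize("better", "adj"))   # good
print(lemmatize("better", "verb"))  # better  (as in "to better oneself")
print(lemmatize("ran", "verb"))     # run
```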

Comparison of Techniques

The choice between tokenization, stemming, and lemmatization depends on the specific NLP task. Tokenization is fundamental for all subsequent processing. Stemming is faster but can produce incorrect or nonsensical stems. Lemmatization is generally more accurate but can be computationally more expensive. Consider the trade-offs between speed and accuracy when selecting the most appropriate technique.

Illustrative Table of Techniques

| Technique | Description | Example |
|---|---|---|
| Tokenization | Dividing text into individual units (tokens). | “The quick brown fox” -> [“The”, “quick”, “brown”, “fox”] |
| Stemming | Reducing words to their root form using rules. | “running” -> “run” |
| Lemmatization | Finding the base form (lemma) of a word considering grammatical context. | “better” (adjective) -> “good” |

Applications of NLP

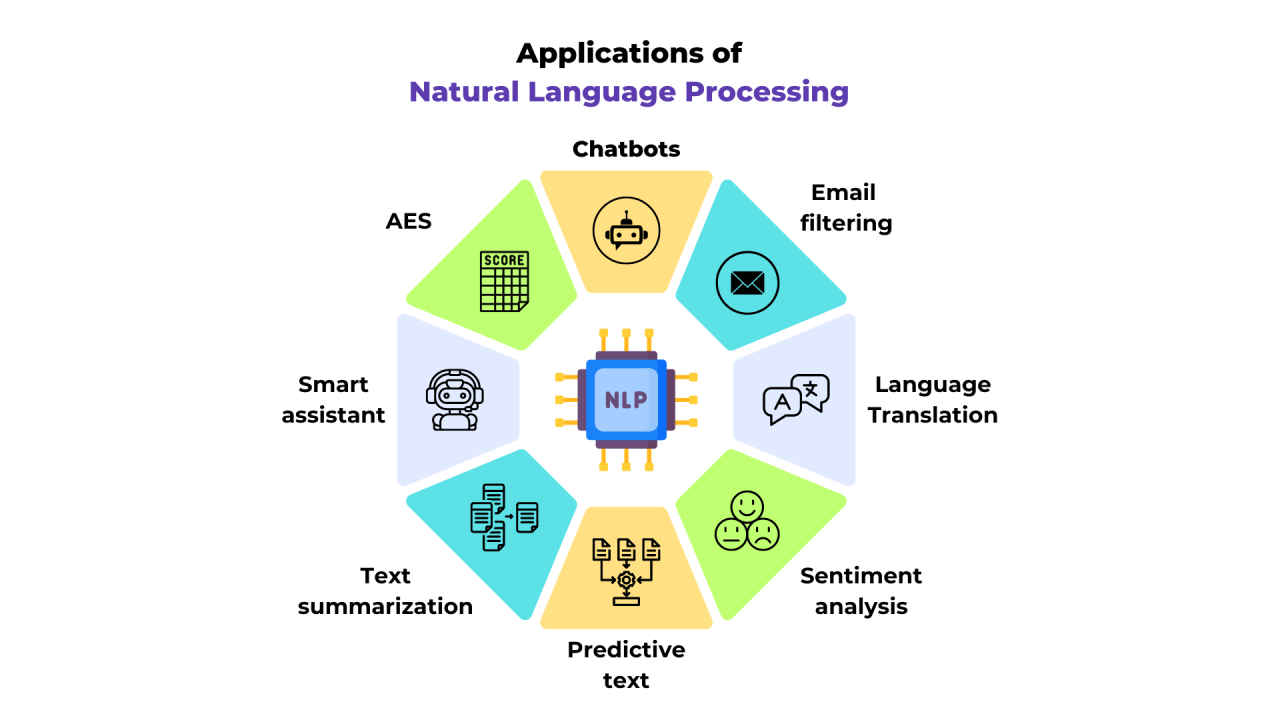

Natural Language Processing (NLP) is rapidly transforming various industries by enabling computers to understand, interpret, and generate human language. This ability to process and analyze vast amounts of text and speech data unlocks significant opportunities for automation, improved decision-making, and enhanced user experiences. Its practical applications are diverse and span numerous sectors. NLP’s versatility allows it to tackle tasks ranging from simple question answering to nuanced sentiment analysis.

This power is now being harnessed to drive innovation and efficiency in areas ranging from customer service to scientific research.

Real-World Applications in Different Domains

NLP applications are increasingly prevalent across various sectors. Its ability to understand and respond to human language enables automated processes, improved customer experiences, and more accurate data analysis. This versatility extends from simple tasks like answering frequently asked questions to complex analyses like identifying market trends from social media conversations.

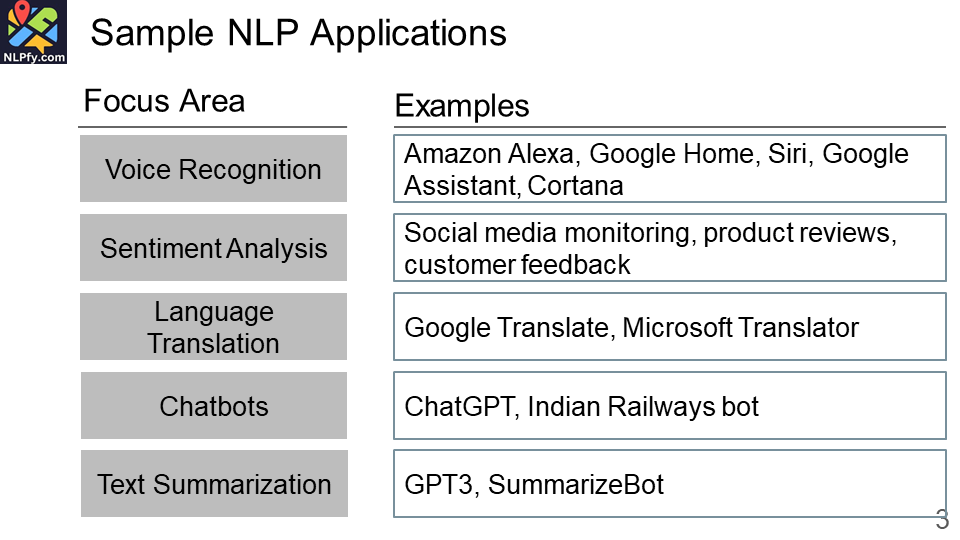

NLP in Chatbots and Virtual Assistants

Chatbots and virtual assistants are prime examples of NLP’s practical applications. These systems leverage NLP techniques to understand user queries, provide relevant responses, and perform tasks. A key feature is their ability to interact with users in a conversational manner, making interactions more natural and efficient. Examples include customer support chatbots that answer common questions, schedule appointments, and provide product information.
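The first step of such a system, mapping a user query to an intent, can be sketched with simple keyword matching. The intents, keywords, and replies below are hypothetical placeholders; production assistants typically use trained intent classifiers or language models rather than fixed keyword sets.

```python
import re

# Hypothetical intents for a support bot: (trigger keywords, canned reply).
INTENTS = {
    "opening_hours": ({"open", "hours", "close", "closing"},
                      "Here are our opening hours."),
    "book_appointment": ({"book", "appointment", "schedule", "reserve"},
                         "Let's book an appointment. Which day suits you?"),
    "shipping": ({"ship", "shipping", "delivery", "deliver"},
                 "Here is our shipping information."),
}
FALLBACK = "Sorry, I didn't understand. Could you rephrase?"


def respond(message):
    """Choose the intent whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best_intent, best_hits = None, 0
    for intent, (keywords, _reply) in INTENTS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return INTENTS[best_intent][1] if best_intent else FALLBACK


print(respond("When do you open?"))               # Here are our opening hours.
print(respond("Can I schedule an appointment?"))  # Let's book an appointment. Which day suits you?
print(respond("What's the weather like?"))        # falls back to the rephrase prompt
```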

NLP in Sentiment Analysis

Sentiment analysis is a crucial application of NLP. It involves determining the emotional tone or attitude expressed in text or speech. This capability is valuable for businesses to gauge customer feedback, understand public opinion, and track brand perception. For example, analyzing social media posts about a product can reveal public sentiment, helping companies adjust their strategies accordingly.

Understanding the sentiment surrounding a topic enables businesses to identify potential issues and react proactively.

NLP in Various Industries

NLP is being implemented across diverse industries, transforming workflows and improving decision-making. Its impact is notable in areas like healthcare, finance, and marketing.

- Healthcare: NLP can automate medical record analysis, assisting doctors in identifying potential diagnoses and treatment options. It also helps in drug discovery and clinical trial analysis.

- Finance: NLP can be used to analyze financial news, market trends, and customer feedback, enabling better investment strategies and risk management.

- Marketing: NLP helps companies understand customer preferences, analyze market trends, and personalize marketing campaigns.

- Customer Service: Chatbots and virtual assistants powered by NLP handle customer inquiries and provide instant support, significantly improving efficiency.

- E-commerce: NLP enables personalized product recommendations, efficient search functionalities, and streamlined customer interactions.

NLP Applications Across Different Sectors

The table below showcases NLP applications across various sectors. These examples illustrate the diverse ways in which NLP is enhancing processes and driving innovation.

| Sector | Application | Example |
|---|---|---|
| Healthcare | Automated medical record analysis, drug discovery | Identifying potential diagnoses from patient records, analyzing clinical trial data to identify effective treatments. |
| Finance | Financial news analysis, fraud detection | Analyzing market trends and identifying potential risks, detecting fraudulent transactions from customer data. |
| Marketing | Sentiment analysis, targeted advertising | Analyzing social media sentiment towards a product, personalizing advertisements based on customer preferences. |
| Customer Service | Chatbots, virtual assistants | Providing instant customer support through chatbots, answering frequently asked questions. |
| E-commerce | Product recommendations, search optimization | Recommending relevant products to customers, improving search results based on user queries. |

Challenges and Future Trends in NLP

Natural Language Processing (NLP) has made significant strides, enabling powerful applications across diverse domains. However, several limitations and challenges remain, particularly concerning data quality, computational resources, and inherent biases. Understanding these obstacles is crucial for developing robust and ethical NLP systems. Future research focuses on overcoming these challenges and exploring new frontiers in NLP.

Limitations and Challenges in NLP

The field of NLP faces several limitations that hinder its widespread adoption and further development. Data quality, a significant challenge, impacts the accuracy and reliability of NLP models. Data often contains inconsistencies, errors, and biases, which can lead to inaccurate or unfair results. Computational resources are another constraint, as training sophisticated NLP models often requires substantial processing power and memory.

Moreover, the inherent biases present in training data can perpetuate and amplify societal biases within the resulting NLP systems. Addressing these issues is critical for building responsible and equitable NLP applications.

Data Quality Issues

Data quality is a fundamental challenge in NLP. Inconsistent data formats, errors, and biases can significantly impact model performance. For instance, if a dataset used to train a sentiment analysis model contains a high percentage of reviews with overly positive or negative expressions, the model may not accurately classify neutral sentiments. Furthermore, insufficient data coverage for specific domains or demographics can lead to poor generalization and limited applicability.

Robust data cleaning, augmentation techniques, and careful data curation are crucial for mitigating these issues.
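A simple first step in data curation is to audit a labeled dataset before training. This sketch assumes examples are `(text, label)` pairs and checks three of the issues described above: label imbalance, exact duplicates after whitespace and case normalization, and empty texts. The function name `audit_dataset` and the sample data are hypothetical.

```python
from collections import Counter


def audit_dataset(examples):
    """Report label shares, duplicate count and empty-text count for (text, label) pairs."""
    total = len(examples)
    labels = Counter(label for _text, label in examples)
    # Normalize case and whitespace so trivially different copies count as duplicates.
    normalized = [" ".join(text.lower().split()) for text, _label in examples]
    duplicates = total - len(set(normalized))
    empty = sum(1 for text in normalized if not text)
    shares = {label: count / total for label, count in labels.items()}
    return {"label_shares": shares, "duplicates": duplicates, "empty": empty}


examples = [("Great product!", "positive"), ("great   product!", "positive"),
            ("Awful.", "negative"), ("", "neutral")]
print(audit_dataset(examples))
# {'label_shares': {'positive': 0.5, 'negative': 0.25, 'neutral': 0.25}, 'duplicates': 1, 'empty': 1}
```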

Computational Resource Constraints

Training complex NLP models often demands substantial computational resources. Deep learning models, for example, require significant processing power, memory, and specialized hardware like GPUs. This can be prohibitive for smaller organizations or researchers with limited access to advanced infrastructure. Consequently, research efforts are directed towards developing more efficient algorithms and models that require less computational resources without compromising accuracy.

Efficient architectures and model compression techniques are essential for overcoming these constraints.

Bias in NLP Systems

NLP systems can inherit and amplify biases present in the training data. If the data reflects societal stereotypes or prejudices, the resulting model will likely perpetuate and even exacerbate these biases in its outputs. For example, an NLP model trained on predominantly male-authored texts might exhibit gender bias when analyzing written text. Addressing this challenge requires careful data curation, bias detection methods, and algorithmic modifications to mitigate the impact of biased data.

Techniques like fairness-aware learning and debiasing methods are being developed to counteract these biases.

Emerging Trends and Future Directions

The future of NLP is marked by several promising trends and research directions. These trends address the challenges outlined above and push the boundaries of what’s possible. One key direction is the development of more robust and efficient algorithms, specifically in the area of large language models.

Future Research Areas in NLP

| Trend | Description | Impact |
|---|---|---|
| Explainable AI (XAI) in NLP | Developing NLP models whose decisions can be understood and explained. | Increased trust and transparency in NLP systems. |
| Multimodal NLP | Combining text with other modalities like images, audio, and video to create more comprehensive understanding. | Enhanced understanding and processing of diverse forms of information. |
| Few-shot learning | Developing models that can learn from minimal labeled data, improving efficiency and reducing the need for extensive data annotation. | Accelerated development of NLP models in specific domains. |
| Robustness and Generalization | Ensuring NLP models can perform well on unseen data and handle noisy or adversarial inputs. | Increased reliability and accuracy of NLP systems in real-world applications. |
| NLP for specific domains | Tailoring NLP models and techniques to particular domains like healthcare, finance, and legal, to provide more specific and effective solutions. | Improved accuracy and efficiency of NLP applications in various sectors. |

Epilogue

In conclusion, understanding the fundamentals of Natural Language Processing is crucial for anyone seeking to leverage the power of language in the digital age. This exploration of NLP’s core concepts, tasks, techniques, and applications provides a solid foundation for comprehending how computers can process and understand human language. As NLP continues to evolve, its impact on various industries will only increase, making this knowledge increasingly valuable.